---

title: "RNA-seq workflow: gene-level exploratory analysis and differential expression"

subtitle: CSAMA 2019 version

author:

- name: Michael I. Love

affiliation:

- Department of Biostatistics, UNC-Chapel Hill, Chapel Hill, NC, US

- Department of Genetics, UNC-Chapel Hill, Chapel Hill, NC, US

- name: Charlotte Soneson

affiliation:

- Friedrich Miescher Institute for Biomedical Research, Switzerland

- SIB Swiss Institute of Bioinformatics, Switzerland

- name: Simon Anders

affiliation: Centre for Molecular Biology, Univ. Heidelberg (ZMBH), Germany

- name: Vladislav Kim

affiliation: &EMBL European Molecular Biology Laboratory (EMBL), Heidelberg, Germany

- name: Johannes Rainer

affiliation: Institute for Biomedicine, Eurac Research, Bolzano, Italy

- name: Wolfgang Huber

affiliation: *EMBL

date: 22 July 2019

output: BiocStyle::html_document

bibliography: bibliography.bib

vignette: >

%\VignetteIndexEntry{RNA-seq workflow at the gene level}

%\VignetteEngine{knitr::rmarkdown}

editor_options:

chunk_output_type: console

---

```{r style, echo=FALSE, message=FALSE, warning=FALSE, results="asis"}

library("BiocStyle")

library("knitr")

library("rmarkdown")

## options(width=70) # Andrzej said this is not needed

opts_chunk$set(message=FALSE, error=FALSE, warning=FALSE,

cache=TRUE, fig.width=5, fig.height=5, eval=FALSE)

```

# Abstract

We walk through an end-to-end gene-level RNA-seq differential

expression workflow using Bioconductor packages. We will start from

the FASTQ files, show how these were quantified with respect to a

reference transcriptome, and prepare a count matrix which tallies the

number of RNA-seq fragments mapped to each gene for each sample. We will

perform exploratory data analysis (EDA) for quality assessment and to

explore the relationship between samples, perform differential gene

expression analysis (DGE) and visually explore the results.

# Introduction

Bioconductor has many packages which support analysis of

high-throughput sequence data, including RNA sequencing (RNA-seq). The

packages which we will use in this workflow include core packages

maintained by the Bioconductor core team for storing genomic data sets

and working with gene annotations [@Huber2015Orchestrating]. We will

also use contributed packages for statistical analysis and

visualization of sequencing data.

](img/320px-Tomohiro_Oura_conductor.jpg)

Through scheduled releases every 6

months, the Bioconductor project ensures that all the packages within

a release will work together in harmony (hence the "conductor"

metaphor). The packages used in this workflow are loaded with the

*library* function and can be installed by following the Bioconductor

[installation instructions](https://bioconductor.org/install/#install-bioconductor-packages).

A published (but essentially similar) version of this workflow,

including reviewer reports and comments is available

at [F1000Research](http://f1000research.com/articles/4-1070).

If you have questions about this workflow or any Bioconductor

software, please post these to the

[Bioconductor support site](https://support.bioconductor.org/).

If the questions concern a specific package, you can tag the post with

the name of the package, or for general questions about the workflow,

tag the post with `rnaseqgene`. Note the

[posting guide](https://www.bioconductor.org/help/support/posting-guide/)

for crafting an optimal question for the support site.

## Experimental data

The data used in this workflow is stored in an R package, *airway2*,

containing quantification data for eight RNA-seq samples.

The quantification files summarize an RNA-seq experiment

wherein airway smooth muscle cells were treated with dexamethasone, a

synthetic glucocorticoid steroid with anti-inflammatory effects

[@Himes2014RNASeq]. Glucocorticoids are used, for example,

by people with asthma to reduce inflammation of the airways. In the

experiment, four primary human airway smooth muscle cell lines were treated with 1

micromolar dexamethasone for 18 hours. For each of the four cell

lines, we have a treated and an untreated sample. For more description

of the experiment see the

[PubMed entry 24926665](http://www.ncbi.nlm.nih.gov/pubmed/24926665)

and for raw data see the

[GEO entry GSE52778](http://www.ncbi.nlm.nih.gov/geo/query/acc.cgi?acc=GSE52778).

An alternative version of the workflow uses the `r Biocexptpkg("airway")`

package, but this version uses the newer

*airway2* because it contains *Salmon* quantification files, which

will be discussed later.

# Preparing count matrices

As input, the count-based statistical methods, such as

`r Biocpkg("DESeq2")` [@Love2014Moderated],

`r Biocpkg("edgeR")` [@Robinson2009EdgeR],

`r Biocpkg("limma")` with the voom method [@Law2014Voom],

`r Biocpkg("DSS")` [@Wu2013New],

`r Biocpkg("EBSeq")` [@Leng2013EBSeq] and

`r Biocpkg("baySeq")` [@Hardcastle2010BaySeq],

expect data as obtained, e.g., from RNA-seq or another

high-throughput sequencing experiment, in the form of a matrix of counts.

The value in the *i*-th row and the *j*-th column of

the matrix tells how many reads (or fragments, for paired-end RNA-seq)

have been assigned to gene *i* in sample *j*. Analogously, for other

types of assays, the rows of the matrix might correspond e.g., to

binding regions (with ChIP-Seq), species of bacteria (with metagenomic

datasets), or peptide sequences (with quantitative mass spectrometry).

The values in the matrix should be counts of sequencing

reads/fragments. This is important for the statistical models used by

*DESeq2* and *edgeR* to hold, as only counts allow assessing the

measurement precision correctly. It is important to not provide

counts that were pre-normalized for sequencing depth/library size, as

the statistical model is most powerful when applied to un-normalized

counts and is designed to account for library size differences

internally.

## Transcript abundances and the *tximport* pipeline

In this workflow, we will use transcript abundances as quantified by the

[Salmon](https://combine-lab.github.io/salmon/) [@Patro2017Salmon]

software package. *Salmon* and other methods, such as

[Sailfish](http://www.cs.cmu.edu/~ckingsf/software/sailfish/) [@Patro2014Sailfish],

[kallisto](https://pachterlab.github.io/kallisto/) [@Bray2016Near], or

[RSEM](http://deweylab.github.io/RSEM/) [@Li2011RSEM],

estimate the relative abundances of all (known, annotated) transcripts

without aligning reads. Because estimating the abundance of the

transcripts involves an inference step, the counts are *estimated*.

Most methods either use a statistical framework called

Expectation-Maximization or Bayesian techniques to estimate the

abundances and counts.

Following quantification, we will use the `r Biocpkg("tximeta")` (or `r

Biocpkg("tximport")` [@Soneson2015Differential])

package for assembling estimated count and offset matrices for use

with Bioconductor differential gene expression packages.

The advantages of using the transcript abundance quantifiers in

conjunction with *tximeta*/*tximport* to produce gene-level count matrices and

normalizing offsets, are: this approach corrects for any potential

changes in gene length across samples (e.g. from differential isoform

usage) [@Trapnell2013Differential]; some of these methods are

substantially faster and require less memory and disk usage compared

to alignment-based methods; and it is possible to avoid discarding

those fragments that can align to multiple genes with homologous

sequence [@Robert2015Errors]. Note that transcript abundance

quantifiers skip the generation of large files which store read

alignments (SAM or BAM files), instead producing smaller files which

store estimated abundances, counts and effective lengths per

transcript. For more details, see the manuscript describing this approach

[@Soneson2015Differential] and the `r Biocpkg("tximport")` package

vignette for software details.

A full tutorial on how to use the *Salmon* software for quantifying

transcript abundance can be

found [here](https://combine-lab.github.io/salmon/getting_started/),

but in the next section we will provide the specific command line code

that was used to quantify the eight samples that will be used in this workflow.

## *Salmon* quantification

We begin by providing *Salmon* with the sequence of all of the

reference transcripts, which we will call the *reference

transcriptome*. We used version 27 of the GENCODE human

transcripts, downloaded from

the [GENCODE website](https://www.gencodegenes.org/). As of writing,

GENCODE has already released newer versions, but for computational

reproducibility, all previous versions are available from the website.

On the command line, creating the transcriptome index looks like:

```

salmon index -i gencode.v27_salmon_0.8.2 -t gencode.v27.transcripts.fa.gz

```

The `0.8.2` refers to the version of *Salmon* that was used. As of writing,

*Salmon* is up to version 0.14.1 and it's always best to use the

latest version of software available.

To quantify an individual sample, `sample_01`, the following command

can be used:

```

salmon quant -i gencode.v27_salmon_0.8.2 -p 6 --libType A \

--gcBias --biasSpeedSamp 5 \

-1 sample_01_1.fastq.gz -2 sample_01_2.fastq.gz \

-o sample_01

```

In simple English, this command says to "quantify a sample using this

transcriptome index, with 6 threads, using automatic

[library type](http://salmon.readthedocs.io/en/latest/library_type.html) detection,

using GC bias correction (the bias speed part is now longer

needed with current versions of *Salmon*), here are the first and second

read, and use this output directory." The output directory will be

created if it doesn't exist, though if earlier parts of the path do

not exist, it will give an error. A single sample of human RNA-seq

usually takes ~5 minutes with the GC bias correction.

Rather than writing the above command on the command line, for

quantifying these eight samples, a simple

[R script](https://github.com/mikelove/airway2/blob/master/inst/scripts/salmon.R)

was written to perform the following *Salmon* calls from R, looping

over the samples. It is also possible to loop over files using a

bash loop, or more advanced workflow management

systems such as Snakemake [@Koster2012Snakemake] or Nextflow

[@Di2017Nextflow].

## Importing into R with *tximport*

Following quantification, we can use *tximport* to import the data

into R and perform statistical analysis using Bioconductor packages.

Normally, we would simply point *tximport* to the `quant.sf` files on

our machine. However, because we are distributing these files as part

of an R package, we have to do some extra steps, to figure out where

the R package, and so the files, are located on *your* machine.

The output directories from the above *Salmon* quantification calls has been

stored in the `extdata` directory of the R package called *airway2*.

```{r eval=FALSE}

library("devtools")

install_github("mikelove/airway2")

```

The R function *system.file* can be used to find out where on your

computer the files from a package have been installed. Here we ask for

the full path to the `extdata` directory, where R packages store

external data, that is part of the *airway2* package.

```{r}

library("airway2")

dir <- system.file("extdata", package="airway2")

dir

list.files(dir)

```

The quantification directories are in the `quants` directory.

```{r}

list.files(file.path(dir,"quants"))

```

The identifiers used here are the *SRR* identifiers from the

[Sequence Read Archive](https://www.ncbi.nlm.nih.gov/sra). For this

experiment, we downloaded a table that links the *SRR* identifiers to

the sample information about each experiment. From this

[Run Selector](https://www.ncbi.nlm.nih.gov/Traces/study/?acc=SRP033351) view

of the experiment, we clicked the button: *RunInfo Table*. This

downloads a file called `SraRunTable.txt`. We included this file in

the *airway2* package, and we read it in now:

```{r}

coldata <- read.delim(file.path(dir, "SraRunTable.txt"))

colnames(coldata)

idx <- c("Run","cell_line","treatment")

coldata[,idx]

```

For simplicity we restrict to these three columns, and do a little

cleanup of the column names and the levels of the factors.

```{r}

coldata <- coldata[,idx]

colnames(coldata) <- c("run","cell","dex")

coldata$cell

coldata$dex

```

We can rename the levels of the `dex` column, but we have to be

careful that our new level names correspond 1-1 to the previous

levels. Here we replace `Dexamethasone` with `trt` and `Untreated`

with `untrt`. There is nothing wrong with the longer names, but

sometimes shorter factor levels are easier to work with, and as long

as the shorter levels can be understood by other analysts, it saves

keystrokes.

```{r}

levels(coldata$dex)

levels(coldata$dex) <- c("trt","untrt")

levels(coldata$dex)

```

**Note:** it is prefered in R that the first level of a factor be the

reference level (e.g. control, or untreated samples), so we can

*relevel* the `dex` factor like so:

```{r}

coldata$dex <- relevel(coldata$dex, "untrt")

```

Now we can create a named vector that points to the *Salmon*

quantification files. Because we have gzipped the quants for

distribution, we point to `quant.sf.gz`. Normally would just be

`quant.sf`. (The importing software can import directly from gzipped

files.)

```{r}

files <- file.path(dir,"quants",coldata$run,"quant.sf.gz")

names(files) <- coldata$run

all(file.exists(files))

```

Because we perform a gene-level analysis we need the mapping of transcripts to

genes for GENCODE v27. Since GENCODE uses Ensembl transcript identifiers, we use

the `r Biocpkg("ensembldb")` package [@Rainer:2019jd] to extract these from one

of the available annotation databases. Pre-built `EnsDb` annotation databases

for all species for a large number of Ensembl releases are available through the

`r Biocpkg("AnnotationHub")` resource. We thus load below an `EnsDb` database

with human annotations for Ensembl release 91, which corresponds to GENCODE

v27. The parameter `localHub = TRUE` ensures that we list and load only locally

stored resources, due to the very limited internet connectivity at the lab site.

```{r annotationhub, message = FALSE}

library("AnnotationHub")

library("ensembldb")

ah <- AnnotationHub(localHub = TRUE)

ah_91 <- query(ah, "EnsDb.Hsapiens.v91")

ah_91

edb <- ah[[names(ah_91)]]

```

We next define a *data.frame* that connects transcripts to genes. Note that the

information in `tx2gene` needs to directly match the reference transcriptome

used by *Salmon*.

```{r}

tx2gene <- transcripts(edb, columns = c("tx_id_version", "gene_id", "tx_id"),

return.type = "data.frame")

colnames(tx2gene) <- c("TXNAME", "GENEID", "tx_id")

head(tx2gene)

```

Finally the following line of code imports *Salmon* transcript

quantifications into R, collapsing to the gene level using the

information in `tx2gene`.

```{r}

library("tximport")

library("jsonlite")

library("readr")

txi <- tximport(files, type="salmon", tx2gene=tx2gene)

```

The `txi` object is simply a list of matrices (and one character

vector):

```{r}

names(txi)

txi$counts[1:3,1:3]

txi$length[1:3,1:3]

txi$abundance[1:3,1:3]

txi$countsFromAbundance

```

## *Alternative*: Importing into R with *tximeta*

In the previous section we showed how to import the quantifications from

*Salmon* into a list of matrices in R, using the *tximport* package. The

*tximeta* package provides a wrapper around *tximport*, which in addition to

importing the quantifications also generates a *SummarizedExperiment* object

(see below). Moreover, it automatically identifies the transcriptome that was

used for the quantification, and downlaods the corresponding annotation

information. Note that this requires an internet connection. Here, we will

illustrate how to use the *tximeta* package to import the data above.

The input to *tximeta* is a data frame with sample information. This data frame

must contain at least two columns, named `names` and `files`, and containing the

sample IDs and the paths to the *Salmon* output files, respectively. Since we

already have the `coldata` table above, we just have to rename the `Run` column

and add the paths to the *Salmon* files.

```{r}

coldata2 <- coldata

colnames(coldata2)[colnames(coldata2) == "run"] <- "names"

coldata2$files <- file.path(dir,"quants",coldata2$names,"quant.sf.gz")

all(file.exists(coldata2$files))

coldata2

```

Next, we call *tximeta*, using the sample information data frame as input

argument.

```{r}

library("tximeta")

(txim <- tximeta(coldata = coldata2))

```

By default, `tximeta` returns a `SummarizedExperiment` object with

transcript-level quantifications. Since we are interested in gene-level

differential expression analysis, we summarize the abundances on the gene level.

```{r}

(txim <- summarizeToGene(txim))

```

## Branching point

At this point, we have quantified the RNA-seq fragments and summarized

to gene-level counts and abundances. This is a branching point where we

could use a variety of Bioconductor packages for exploration and

differential expression of the count data, including

`r Biocpkg("edgeR")` [@Robinson2009EdgeR],

`r Biocpkg("limma")` with the voom method [@Law2014Voom],

`r Biocpkg("DSS")` [@Wu2013New],

`r Biocpkg("EBSeq")` [@Leng2013EBSeq] and

`r Biocpkg("baySeq")` [@Hardcastle2010BaySeq].

@Schurch2016How

[compared performance](https://www.ncbi.nlm.nih.gov/pmc/articles/pmid/27022035/)

of different statistical methods

for RNA-seq using a large number of biological replicates and can help

users to decide which tools make sense to use, and how many

biological replicates are necessary to obtain a certain sensitivity.

We will continue using `r Biocpkg("DESeq2")` [@Love2014Moderated] and

`r Biocpkg("edgeR")` [@Robinson2009EdgeR] below.

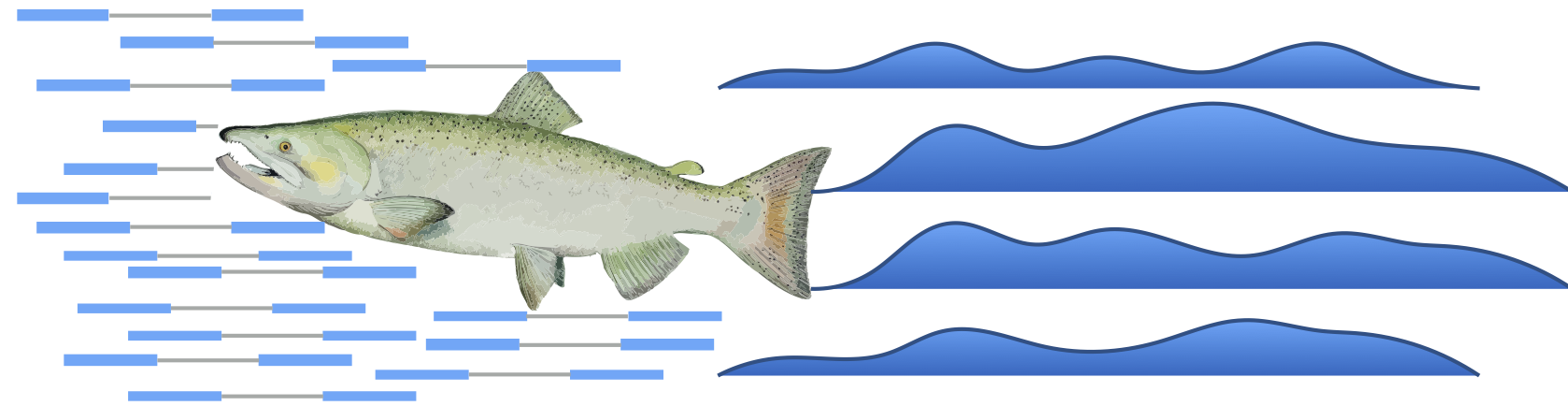

## SummarizedExperiment

The *DESeq2* package will store the data and intermediate results in

an object called a *DESeqDataSet*. This object builds on top of a

general Bioconductor class of object called the

*SummarizedExperiment*. We will therefore first introduce the

*SummarizedExperiment* and we begin with a diagram of the three

component pieces:

```{r sumexp, echo = FALSE, out.width="80%", fig.cap="The component parts of a *SummarizedExperiment* object. The 'assay' (pink block) contains the matrix of counts, the 'rowRanges' or 'rowData' (blue block) contains information about the genomic ranges and the 'colData' (green block) contains information about the samples. The highlighted line in each block represents the first row (note that the first row of 'colData' lines up with the first column of the 'assay')."}

par(mar = c(0,0,0,0))

plot(1,1,xlim = c(0,100),ylim = c(0,100),bty = "n",

type="n",xlab="",ylab="",xaxt="n",yaxt="n")

polygon(c(45,90,90,45),c(5,5,70,70),col="pink",border=NA)

polygon(c(45,90,90,45),c(68,68,70,70),col="pink3",border=NA)

text(67.5,40,"assay")

text(67.5,35,'e.g. "counts"')

polygon(c(10,40,40,10),c(5,5,70,70),col="skyblue",border=NA)

polygon(c(10,40,40,10),c(68,68,70,70),col="skyblue3",border=NA)

text(25,40,"rowRanges,")

text(25,35,"or rowData")

polygon(c(45,90,90,45),c(75,75,95,95),col="palegreen",border=NA)

polygon(c(45,47,47,45),c(75,75,95,95),col="palegreen3",border=NA)

text(67.5,85,"colData")

```

The *SummarizedExperiment* container is diagrammed in the Figure above

and discussed in the latest Bioconductor paper [@Huber2015Orchestrating].

In our case we have created a single matrix named `"counts"` that

contains the estimated counts for each gene and sample, which is

stored in `assay`. It is also possible to store multiple matrices,

accessed with `assays`. The `rowData` for our object will keep track

of the intermediate calculations for each gene: e.g. the mean of

normalized counts, the dispersion estimates, the estimated

coefficients and their standard error, etc. We can also include

information about the location of each gene, in which case, we would

use `rowRanges` to access the *GenomicRanges* or *GenomicRangesList*

associated with each gene.The component parts of the

*SummarizedExperiment* are accessed with an R function of the same

name: `assay` (or `assays`), `rowRanges`/`rowData` and `colData`.

# The *DESeqDataSet* object, sample information and the design formula

Bioconductor software packages often define and use a custom class for

storing data that makes sure that all the needed data slots are

consistently provided and fulfill the requirements. In addition,

Bioconductor has general data classes (such as the

*SummarizedExperiment*) that can be used to move data between

packages. Additionally, the core Bioconductor classes provide useful

functionality: for example, subsetting or reordering the rows or

columns of a *SummarizedExperiment* automatically subsets or reorders

the associated *rowData* and *colData*, which can help to prevent

accidental sample swaps that would otherwise lead to spurious

results. With *SummarizedExperiment* this is all taken care of behind

the scenes.

In *DESeq2*, the custom class is called *DESeqDataSet*. One of the

main differences with *SummarizedExperiment* is that we will use the

*counts* function to access the `assay` matrix, and it is required

that this matrix stores non-negative integers (this is to prevent

mistaken use of the package for non-count data).

A second difference is that the *DESeqDataSet* has an associated

*design formula*. The experimental design is specified at the

beginning of the analysis, as it will inform many of the *DESeq2*

functions how to treat the samples in the analysis (one exception is

the size factor estimation, i.e., the adjustment for differing library

sizes, which does not depend on the design formula). The design

formula tells which columns in the sample information table (`colData`)

specify the experimental design and how these factors should be used

in the analysis.

The simplest design formula for differential expression would be

`~ condition`, where `condition` is a column in `colData(dds)` that

specifies which of two (or more groups) the samples belong to. For

the airway experiment, we will specify `~ cell + dex`

meaning that we want to test for the effect of dexamethasone (`dex`)

controlling for the effect of different cell line (`cell`).

We can construct a *DESeqDataSet* object from the *tximport* object

using the function *DESeqDataSetFromTximport*:

```{r}

library("DESeq2")

dds <- DESeqDataSetFromTximport(txi, coldata, design = ~cell + dex)

```

For running *DESeq2* or *edgeR* models, you can use R's formula notation to

express any fixed-effects experimental design.

Note that *DESeq2* and *edgeR* use the same formula notation as, for instance, the *lm*

function of base R. If the research aim is to determine for which

genes the effect of treatment is different across groups, then

interaction terms can be included and tested using a design such as

`~ group + treatment + group:treatment`. See the manual page for

`?results` for more examples.

## Creating a DGEList for use with *edgeR*

The *edgeR* package uses another type of data container,

namely a *DGEList* object. It is just as easy to create a *DGEList* object using

the count matrix and information about samples. We can additionally add

information about the genes:

```{r}

genetable <- data.frame(gene.id = rownames(txi$counts))

```

Because we are using counts from *tximport*, we have a slightly

different procedure to import them, which involves calculating a

statistical *offset* that will account for differences in gene length

across samples. The following code is from the *tximport* vignette in

the *edgeR* section.

```{r message=FALSE}

library("edgeR")

cts <- txi$counts

normMat <- txi$length

normMat <- normMat/exp(rowMeans(log(normMat)))

o <- log(calcNormFactors(cts/normMat)) + log(colSums(cts/normMat))

```

Actually creating the *DGEList* object simply entails providing the

counts, sample information, gene and information. The second line adds

an appropriate offset.

```{r}

dge <- DGEList(counts = cts,

samples = coldata,

genes = genetable)

dge <- scaleOffset(dge, t(t(log(normMat)) + o))

names(dge)

```

A much simpler approach with respect to the amount of code is to generate

counts-from-abundance using *tximport*, which correct for the gene

length changes directly. Example for this code is:

```{r}

txi2 <- tximport(files, type="salmon", tx2gene=tx2gene,

countsFromAbundance="lengthScaledTPM")

dge2 <- DGEList(counts = txi2$counts,

samples = coldata,

genes = genetable)

names(dge2)

```

The *counts + offset* method and the *counts-from-abundance* method

are not statistically identical (obviously), but tend to output

roughly similar groups of genes. In this dataset, using

counts-from-abundance has 96% of genes in common with *count + offset*

at a target 5% FDR.

Just like the *SummarizedExperiment* and the *DESeqDataSet* the

*DGEList* contains all the information we need: the count matrix,

information about the samples (columns of the count matrix), and

information about the genes (rows of the count matrix).

# Exploratory analysis and visualization

There are two separate paths in this workflow; the one we will

see first involves *transformations of the counts*

in order to visually explore sample relationships.

In the second part, we will go back to the original raw counts

for *statistical testing*. This is critical because

the statistical testing methods rely on original count data

(not scaled or transformed) for calculating the precision of measurements.

## Pre-filtering the dataset

Our count matrix with our *DESeqDataSet* contains many rows with only zeros, and

additionally many rows with only a few fragments in total. In order to reduce

the size of the object, and to increase the speed of our functions, we can

remove the rows that have no or nearly no information about the amount of gene

expression. Here we apply the most minimal filtering rule: removing rows of the

*DESeqDataSet* that have no counts, or only a single count across all samples.

Additional weighting/filtering to improve power is applied at a later step in

the workflow.

```{r}

nrow(dds)

dds <- dds[ rowSums(counts(dds)) > 1, ]

nrow(dds)

```

Here, we also filter the *DGEList* for *edgeR* in the same way. Note, however,

that *edgeR* does not apply filtering internally, and for this reason, typically

a stronger prefiltering criterion is used at this stage for *edgeR*.

```{r}

dge <- dge[rowSums(round(dge$counts)) > 1, ]

all(rownames(dge) == rownames(dds))

```

We use the above code mostly for aiding comparison with *DESeq2* in

later sections. The recommended filtering code for *edgeR* (here not

evaluated) is as follows:

```{r, eval=FALSE}

dge <- dge[filterByExpr(dge),]

```

## Variance stabilizing transformations

Many common statistical methods for exploratory analysis of

multidimensional data, for example clustering and *principal

components analysis* (PCA), work best for data that generally has the

same range of variance at different ranges of the mean values. When

the expected amount of variance is approximately the same across

different mean values, the data is said to be *homoskedastic*. For

RNA-seq counts, however, the expected variance grows with the mean. For

example, if one performs PCA directly on a matrix of

counts or normalized counts (e.g. correcting for differences in

sequencing depth), the resulting plot typically depends mostly

on the genes with *highest* counts because they show the largest

absolute differences between samples. A simple and often used

strategy to avoid this is to take the logarithm of the normalized

count values plus a pseudocount of 1; however, depending on the

choice of pseudocount, now the genes with the very *lowest* counts

will contribute a great deal of noise to the resulting plot, because

taking the logarithm of small counts actually inflates their variance.

We can quickly show this property of counts with some simulated

data (here, Poisson counts with a range of lambda from 0.1 to 100).

We plot the standard deviation of each row (genes) against the mean:

```{r meanSdCts, fig.cap="SD over mean plot for Poisson-distributed counts."}

lambda <- 10^seq(from = -1, to = 2, length = 1000)

cts <- matrix(rpois(1000*100, lambda), ncol = 100)

library("vsn")

meanSdPlot(cts, ranks = FALSE)

```

And for logarithm-transformed counts after adding a pseudocount of 1:

```{r meanSdLogCts, fig.cap="SD over mean plot for log(x+1) transformed counts."}

log.cts.one <- log2(cts + 1)

meanSdPlot(log.cts.one, ranks = FALSE)

```

The logarithm with a small pseudocount amplifies differences when the

values are close to 0. The low count genes with low signal-to-noise

ratio will overly contribute to sample-sample distances and PCA

plots.

As a solution, *DESeq2* offers two transformations for count data that

stabilize the variance across the mean: (1) the

the *variance stabilizing transformation* (VST)

for negative binomial data with a dispersion-mean trend

[@Anders2010Differential], implemented in the *vst* function,

and (2) the *regularized-logarithm transformation* or *rlog*

[@Love2014Moderated].

For genes with high counts, the VST and rlog will give similar result

to the ordinary log2 transformation of normalized counts. For genes

with lower counts, however, the values are shrunken towards the genes'

averages across all samples. The VST or rlog-transformed data then

becomes approximately homoskedastic, and can be used directly for

computing distances between samples, making PCA plots, or as input to

downstream methods which perform best with homoskedastic data.

**Which transformation to choose?** Mike tends to prefer the VST. The

VST is much faster to compute via the function *vst* and is less

sensitive to high count outliers than the rlog. Perhaps further

development on the rlog would make it less sensitive to outliers.

(Why have the rlog at all then? In the DESeq2 paper, the rlog

sometimes outperformed the VST in terms of recovering clusters when

there was a large range of sequencing depth across samples e.g. x10

from lowest to highest sequencing depth.)

Note that the two transformations offered by DESeq2 are provided for

applications *other* than differential testing.

For differential testing we recommend the

*DESeq* function applied to raw counts, as described later

in this workflow, which also takes into account the dependence of the

variance of counts on the mean value during the dispersion estimation

step.

Both transformation functions return an object based on the *SummarizedExperiment*

class that contains the transformed values in its *assay* slot.

```{r vst, fig.cap="SD over mean plot for VST values from counts used in this workflow."}

vsd <- vst(dds, blind = FALSE)

head(assay(vsd), 3)

meanSdPlot(assay(vsd), ranks = FALSE)

```

```{r rlog, fig.cap="SD over mean plot for rlog-transformed counts from this workflow."}

rld <- rlog(dds, blind = FALSE)

head(assay(rld), 3)

meanSdPlot(assay(rld), ranks = FALSE)

```

In the above function calls, we specified `blind = FALSE`, which means

that differences between cell lines and treatment (the variables in

the design) will not contribute to the expected variance-mean trend of

the experiment. The experimental design is not used directly in the

transformation, only in estimating the global amount of variability in

the counts. For a fully *unsupervised* transformation, one can set

`blind = TRUE` (which is the default).

To show the effect of the transformation, in the figure below we plot

the first sample against the second, first simply using the *log2*

function of normalized counts (after adding 1, to avoid taking the log

of zero), and then using the VST and rlog-transformed values.

```{r transform-plot, out.width="100%", fig.width=6, fig.height=2.5, fig.cap="Scatterplot of transformed counts from two samples. Shown are scatterplots using the log2 transform of normalized counts (left), using the VST (middle), and using the rlog (right). While the rlog is on roughly the same scale as the log2 counts, the VST has a upward shift for the smaller values. It is the differences between samples (deviation from y = x in these scatterplots) which will contribute to the distance calculations and the PCA plot."}

library("dplyr")

library("ggplot2")

library("hexbin")

ntd <- normTransform(dds)

df <- bind_rows(

as_data_frame(assay(ntd)[, 1:2]) %>% mutate(transformation = "log2(x + 1)"),

as_data_frame(assay(vsd)[, 1:2]) %>% mutate(transformation = "vst"),

as_data_frame(assay(rld)[, 1:2]) %>% mutate(transformation = "rlog"))

colnames(df)[1:2] <- c("x", "y")

ggplot(df, aes(x = x, y = y)) + geom_hex(bins = 80) +

coord_fixed() + facet_grid(. ~ transformation)

```

We can see how genes with low counts (bottom left-hand corner) seem to

be excessively variable on the ordinary logarithmic scale, while the

VST and rlog compress differences for the low count genes

for which the data provide little information about differential

expression.

## Sample distances

A useful first step in an RNA-seq analysis is often to assess overall

similarity between samples: Which samples are similar to each other,

which are different? Does this fit to the expectation from the

experiment's design?

We use the R function *dist* to calculate the Euclidean distance

between samples. To ensure we have a roughly equal contribution from

all genes, we use it on the VST data. We need to

transpose the matrix of values using *t*, because the *dist* function

expects the different samples to be rows of its argument, and

different dimensions (here, genes) to be columns.

```{r}

sampleDists <- dist(t(assay(vsd)))

sampleDists

```

We visualize the distances in a heatmap in a figure below, using the function

*pheatmap* from the `r CRANpkg("pheatmap")` package.

```{r}

library("pheatmap")

library("RColorBrewer")

```

In order to plot the sample distance matrix with the rows/columns

arranged by the distances in our distance matrix,

we manually provide `sampleDists` to the `clustering_distance`

argument of the *pheatmap* function.

Otherwise the *pheatmap* function would assume that the matrix contains

the data values themselves, and would calculate distances between the

rows/columns of the distance matrix, which is not desired.

We also manually specify a blue color palette using the

*colorRampPalette* function from the `r CRANpkg("RColorBrewer")` package.

```{r distheatmap, fig.width = 6.1, fig.height = 4.5, fig.cap="Heatmap of sample-to-sample distances using the VST values."}

sampleDistMatrix <- as.matrix(sampleDists)

rownames(sampleDistMatrix) <- paste(vsd$dex, vsd$cell, sep = " - ")

colnames(sampleDistMatrix) <- NULL

colors <- colorRampPalette(rev(brewer.pal(9, "Blues")))(255)

pheatmap(sampleDistMatrix,

clustering_distance_rows = sampleDists,

clustering_distance_cols = sampleDists,

col = colors)

```

Note that we have changed the row names of the distance matrix to

contain treatment type and patient number instead of sample ID, so

that we have all this information in view when looking at the heatmap.

Another option for calculating sample distances is to use the

*Poisson Distance* [@Witten2011Classification], implemented in the

`r CRANpkg("PoiClaClu")` package.

This measure of dissimilarity between counts

also takes the inherent variance

structure of counts into consideration when calculating the distances

between samples. The *PoissonDistance* function takes the original

count matrix (not normalized) with samples as rows instead of columns,

so we need to transpose the counts in `dds`.

```{r}

library("PoiClaClu")

poisd <- PoissonDistance(t(counts(dds)))

```

We plot the heatmap in a Figure below.

```{r poisdistheatmap, fig.width = 6.1, fig.height = 4.5, fig.cap="Heatmap of sample-to-sample distances using the *Poisson Distance*."}

samplePoisDistMatrix <- as.matrix(poisd$dd)

rownames(samplePoisDistMatrix) <- paste(vsd$dex, vsd$cell, sep = " - ")

colnames(samplePoisDistMatrix) <- NULL

pheatmap(samplePoisDistMatrix,

clustering_distance_rows = poisd$dd,

clustering_distance_cols = poisd$dd,

col = colors)

```

## PCA plot

Another way to visualize sample-to-sample distances is a

principal components analysis (PCA). In this ordination method, the

data points (here, the samples) are projected onto the 2D plane

such that they spread out in the two directions that explain most of

the differences (figure below). The x-axis is the direction that separates the data

points the most. The values of the samples in this direction are

written *PC1*. The y-axis is a direction (it must be *orthogonal* to

the first direction) that separates the data the second most. The

values of the samples in this direction are written *PC2*.

The percent of the total variance that is contained in the direction

is printed in the axis label. Note that these percentages do not add to

100%, because there are more dimensions that contain the remaining

variance (although each of these remaining dimensions will explain

less than the two that we see).

```{r plotpca, fig.width = 6, fig.height = 4.5, fig.cap="PCA plot using the VST values. Each unique combination of treatment and cell line is given its own color."}

plotPCA(vsd, intgroup = c("dex", "cell"))

```

Here, we have used the function *plotPCA* that comes with *DESeq2*.

The two terms specified by `intgroup` are the interesting groups for

labeling the samples; they tell the function to use them to choose

colors. We can also build the PCA plot from scratch using the

`r CRANpkg("ggplot2")` package [@Wickham2009Ggplot2].

This is done by asking the *plotPCA* function

to return the data used for plotting rather than building the plot.

See the *ggplot2* [documentation](http://docs.ggplot2.org/current/)

for more details on using *ggplot*.

```{r}

pcaData <- plotPCA(vsd, intgroup = c("dex", "cell"), returnData = TRUE)

pcaData

percentVar <- round(100 * attr(pcaData, "percentVar"))

```

We can then use these data to build up a second plot in a figure below, specifying that the

color of the points should reflect dexamethasone treatment and the

shape should reflect the cell line.

```{r ggplotpca, fig.width = 6, fig.height = 4.5, fig.cap="PCA plot using the VST values with custom *ggplot2* code. Here we specify cell line (plotting symbol) and dexamethasone treatment (color)."}

ggplot(pcaData, aes(x = PC1, y = PC2, color = dex, shape = cell)) +

geom_point(size = 3) +

xlab(paste0("PC1: ", percentVar[1], "% variance")) +

ylab(paste0("PC2: ", percentVar[2], "% variance")) +

coord_fixed()

```

From the PCA plot, we see that the differences between cells (the

different plotting shapes) are considerable, though not stronger than the differences due to

treatment with dexamethasone (red vs blue color). This shows why it will be important to

account for this in differential testing by using a paired design

("paired", because each dex treated sample is paired with one

untreated sample from the *same* cell line). We are already set up for

this design by assigning the formula `~ cell + dex` earlier.

## MDS plot

Another plot, very similar to the PCA plot, can be made using the

*multidimensional scaling* (MDS) function in base R. This is useful when we

don't have a matrix of data, but only a matrix of distances. Here we

compute the MDS for the distances calculated from the VST data

and plot these in a figure below.

```{r mdsvst, fig.width = 6, fig.height = 4.5, fig.cap="MDS plot using VST values."}

mds <- as.data.frame(colData(vsd)) %>%

cbind(cmdscale(sampleDistMatrix))

ggplot(mds, aes(x = `1`, y = `2`, color = dex, shape = cell)) +

geom_point(size = 3) + coord_fixed()

```

In a figure below we show the same plot for the *PoissonDistance*:

```{r mdspois, fig.width = 6, fig.height = 4.5, fig.cap="MDS plot using the *Poisson Distance*."}

mdsPois <- as.data.frame(colData(dds)) %>%

cbind(cmdscale(samplePoisDistMatrix))

ggplot(mdsPois, aes(x = `1`, y = `2`, color = dex, shape = cell)) +

geom_point(size = 3) + coord_fixed()

```

# Differential expression analysis

## Performing differential expression testing with *DESeq2*

As we have already specified an experimental design when we created

the *DESeqDataSet*, we can run the differential expression pipeline on

the raw counts with a single call to the function *DESeq*:

```{r airwayDE}

dds <- DESeq(dds)

```

This function will print out a message for the various steps it

performs. These are described in more detail in the manual page for

*DESeq*, which can be accessed by typing `?DESeq`. Briefly these are:

the estimation of size factors (controlling for differences in the

sequencing depth of the samples), the estimation of

dispersion values for each gene, and fitting a generalized linear model.

A *DESeqDataSet* is returned that contains all the fitted

parameters within it, and the following section describes how to

extract out results tables of interest from this object.

## Building the results table

Calling *results* without any arguments will extract the estimated

log2 fold changes and *p* values for the last variable in the design

formula. If there are more than 2 levels for this variable, *results*

will extract the results table for a comparison of the last level over

the first level. The comparison is printed at the top of the output:

`dex trt vs untrt`.

```{r}

res <- results(dds)

res

```

We could have equivalently produced this results table with the

following more specific command. Because `dex` is the last variable in

the design, we could optionally leave off the `contrast` argument to extract

the comparison of the two levels of `dex`.

```{r}

res <- results(dds, contrast = c("dex", "trt", "untrt"))

```

As `res` is a *DataFrame* object, it carries metadata

with information on the meaning of the columns:

```{r}

mcols(res, use.names = TRUE)

```

The first column, `baseMean`, is a just the average of the normalized

count values, divided by the size factors, taken over all samples in the

*DESeqDataSet*.

The remaining four columns refer to a specific contrast, namely the

comparison of the `trt` level over the `untrt` level for the factor

variable `dex`. We will find out below how to obtain other contrasts.

The column `log2FoldChange` is the effect size estimate. It tells us

how much the gene's expression seems to have changed due to treatment

with dexamethasone in comparison to untreated samples. This value is

reported on a logarithmic scale to base 2: for example, a log2 fold

change of 1.5 means that the gene's expression is increased by a

multiplicative factor of \(2^{1.5} \approx 2.82\).

Of course, this estimate has an uncertainty associated with it, which

is available in the column `lfcSE`, the standard error estimate for

the log2 fold change estimate. We can also express the uncertainty of

a particular effect size estimate as the result of a statistical

test. The purpose of a test for differential expression is to test

whether the data provides sufficient evidence to conclude that this

value is really different from zero. *DESeq2* performs for each gene a

*hypothesis test* to see whether evidence is sufficient to decide

against the *null hypothesis* that there is zero effect of the treatment

on the gene and that the observed difference between treatment and

control was merely caused by experimental variability (i.e., the type

of variability that you can expect between different

samples in the same treatment group). As usual in statistics, the

result of this test is reported as a *p* value, and it is found in the

column `pvalue`. Remember that a *p* value indicates the probability

that a fold change as strong as the observed one, or even stronger,

would be seen under the situation described by the null hypothesis.

We can also summarize the results with the following line of code,

which reports some additional information, that will be covered in

later sections.

```{r}

summary(res)

```

Note that there are many genes with differential expression due to

dexamethasone treatment at the FDR level of 10%. This makes sense, as

the smooth muscle cells of the airway are known to react to

glucocorticoid steroids. However, there are two ways to be more strict

about which set of genes are considered significant:

* lower the false discovery rate threshold (the threshold on `padj` in

the results table)

* raise the log2 fold change threshold from 0 using the `lfcThreshold`

argument of *results*

If we lower the false discovery rate threshold, we should also

inform the `results()` function about it, so that the function can use this

threshold for the optimal independent filtering that it performs:

```{r}

res.05 <- results(dds, alpha = 0.05)

table(res.05$padj < 0.05)

```

If we want to raise the log2 fold change threshold, so that we test

for genes that show more substantial changes due to treatment, we

simply supply a value on the log2 scale. For example, by specifying

`lfcThreshold = 1`, we test for genes that show significant effects of

treatment on gene counts more than doubling or less than halving,

because \(2^1=2\).

```{r}

resLFC1 <- results(dds, lfcThreshold = 1)

table(resLFC1$padj < 0.05)

```

Sometimes a subset of the *p* values in `res` will be `NA` ("not

available"). This is *DESeq*'s way of reporting that all counts for

this gene were zero, and hence no test was applied. In addition, *p*

values can be assigned `NA` if the gene was excluded from analysis

because it contained an extreme count outlier. For more information,

see the outlier detection section of the *DESeq2* vignette.

## Independent hypothesis weighting within *DESeq2*

The *results* function in *DESeq2* integrates with a new method for

dealing with genes that have low counts and therefore low power for

detecting differential gene expression. This method is called

Independent Hypothesis Weighting (IHW) [@Ignatiadis2016], and

generalizes the idea of filtering low count genes to reduce the

multiple testing burden. While the specifics of the

method are beyond the scope of this workflow, we demonstrate its usage

here:

```{r}

library("IHW")

resIHW <- results(dds, filterFun=ihw)

summary(resIHW)

```

The results are similar but not identical to the results table built

above, which uses a simple optimization of a filter over mean

normalized counts. The new procedure results in a gain of 50 genes.

```{r}

table(old=res$padj < .1, IHW=resIHW$padj < .1)

```

We can plot the weighting function which is fit by the *IHW* package:

```{r ihw-plot, fig.cap="IHW weighting function."}

plot(metadata(resIHW)$ihwResult)

```

## Other comparisons

In general, the results for a comparison of any two levels of a

variable can be extracted using the `contrast` argument to

*results*. The user should specify three values: the name of the

variable, the name of the level for the numerator, and the name of the

level for the denominator. Here we extract results for the log2 of the

fold change of one cell line over another:

```{r}

results(dds, contrast = c("cell", "N061011", "N61311"))

```

There are additional ways to build results tables for certain

comparisons after running *DESeq* once.

If results for an interaction term are desired, the `name`

argument of *results* should be used. Please see the

help page for the *results* function for details on the additional

ways to build results tables. In particular, the **Examples** section of

the help page for *results* gives some pertinent examples.

## Annotation of RNA-seq results

One of the central steps in the analysis of genomic data is the annotation of

the quantified entities to biologically more relevant representations such as

transcripts, genes or proteins. Such annotations enable for example pathway

enrichment analyses and ease the interpretation of the results. Bioconductor

provides a large variety of annotation packages and resources, most of them

supporting the `r Biocpkg("AnnotationDbi")` interface which enables a unified

way to retrieve annotations for given identifiers. The annotation resources from

Bioconductor range from web-based tools such as `r Biocpkg("biomaRt")` to

packages working with pre-built databases containing all identifier mappings for

a certain species (the `*.org` packages such as `org.Hs.eg.db`) or providing

gene and transcript models, such as databases build by

`r Biocpkg("GenomicFeatures")` (`TxDb` databases/packages) and

`r Biocpkg("ensembldb")` (`EnsDb` databases/packages) [@Rainer:2019jd].

In this section we use the `ensembldb` package to annotate all tested genes

which are identified by Ensembl gene IDs. The Salmon quantification used in the

analysis leading to this table was based on GENCODE v27 transcripts which is

linked to Ensembl release 91. We thus load below an `EnsDb` database with human

annotations for Ensembl release 91 from the `r Biocpkg("AnnotationHub")`

resource. The parameter `localHub = TRUE` ensures that we list and load only

locally stored resources (due to the very limited internet connectivity at the

lab site).

```{r annotationhub-ensdb, message = FALSE}

library("AnnotationHub")

library("ensembldb")

ah <- AnnotationHub(localHub = TRUE)

ah_91 <- query(ah, "EnsDb.Hsapiens.v91")

ah_91

#' Load the database

edb <- ah[[names(ah_91)]]

```

If we had a working internet connection we could simply list all available

`EnsDb` databases with `query(ah, "EnsDb")` (after loading `AnnotationHub` with

`localHub = FALSE`). We can now use the `edb` variable to access the data in

the database. With the `listColumns` function we can for example list all of the

available annotation fields (columns) in the database.

```{r listcolumns}

listColumns(edb)

```

We could get data from any of these database columns by passing the respective

column name(s) along with the `column` parameter to any function retrieving

annotations from an `EnsDb` database. Gene-related annotations can be retrieved

with the `genes` function, that by default (and similar to the `genes` function

from the `r Biocpkg("GenomicFeatures")` package) returns a `GRanges` object with

the genomic coordinates of the genes and additional annotation columns in the

`GRanges`' *metadata* columns. Below we use the `genes` call to retrieve

annotations for all human genes. With the parameter `return.type = "DataFrame"`

we tell the function to return the result as a `DataFrame` instead of the

default `GRanges`.

```{r annotations-all-genes}

anns <- genes(edb, return.type = "DataFrame")

rownames(anns) <- anns$gene_id

```

We can now combine the RNA-seq result with the annotation.

```{r annotation-combine}

resAnn <- BiocGenerics::cbind(anns[BiocGenerics::rownames(res), ], res)

resAnn

```

If we had only a reduced set of genes to annotate, e.g. the set of the most

significant genes, it is faster and more elegant to extract annotations for the

subset of genes using the filter framework from `ensembldb`. Below we create a

*top table* by selecting the 20 genes with the smallest p-values.

```{r res-top20}

resTop20 <- res[order(res$pvalue), ][1:20, ]

```

To get only annotations for the selected genes we pass the filter expression

`~ gene_id == rownames(resTop20)` with the `filter` parameter to the `genes`

function. Filter expressions have to be written in the form of a formula

(i.e. starting with `~`) and support any logical R expression and any database

column/field in the `EnsDb` database (use `supportedFilters(edb)` to list all

supported fields). Also, by specifying the respective field names with the

`columns` parameter we extract only the gene name (equivalent with the HGNC

symbol for most genes), description the gene biotype and the NCBI Entrezgene ID

from the database.

```{r annotations-top20}

anns <- genes(edb, filter = ~ gene_id == rownames(resTop20),

columns = c("gene_name", "description", "gene_biotype",

"entrezid"), return.type = "DataFrame")

```

Note that the order of the genes in the returned `data.frame` is **not** the

same as in `resTop20`. We thus below re-order the annotations to match the

order of genes in the top table and subsequently join the two data frames.

```{r join-resTop20}

resTop20 <- cbind(anns[match(rownames(resTop20), anns$gene_id), ],

resTop20)

```

Be aware that mappings between Ensembl and NCBI identifiers is not necessarily a

1:1 mapping. In our case we got for some Ensembl gene IDs more than one NCBI

Entrezgene ID. The column `"entrezid"` in our result table is thus a `list` with

eventually more than one Entrezgene ID in one row. Exporting such a table could

be problematic and we collapse therefore Entrezgene IDs in this columns into a

single character string, with multiple IDs separated by a semicolon (`";"`).

```{r collapse-entrezid}

resTop20$entrezid <- sapply(resTop20$entrezid, function(z) {

if (any(is.na(z))) z

else paste(z, collapse = ";")

})

```

The 20 most significantly regulated genes in the present analysis are listed

below.

```{r resTop20Table, results = "asis", echo = FALSE}

knitr::kable(resTop20)

```

### Using *ensembldb* to query for genes encoding a specific protein domain

For some experiments it might be interesting to search for genes, or rather

transcripts, that encode a protein with a certain protein domain. For the

present data set we could for example search for genes with proteins encoding

the ligand binding domain of nuclear hormone receptors (such as the

glucocorticoid receptor, the gene activated by treatment with the synthetic

glucocorticoid dexamethasone and hence being mainly responsible for the

transcriptional changes observed in the present dataset). Below we thus query

the database for all such genes using the protein domain ID

[PF00104](http://pfam.xfam.org/family/PF00104) from Pfam. The filter used in the

query below will return all genes with a transcript annotated to the specific

protein domain restricting to genes that are detected in the present analysis.

```{r protein-domain-query}

hormoneR <- genes(edb,

filter = ~ protein_domain_id == "PF00104" &

gene_id == rownames(res),

return.type = "data.frame")

rownames(hormoneR) <- hormoneR$gene_id

nrow(hormoneR)

```

How many genes were identified? Next we identify nuclear

hormone receptors which are significantly differentially expressed at a 5% FDR

in the present data set.

```{r hormone-receptors-res}

resHormoneR <- res[hormoneR$gene_id, ]

resHormoneR <- resHormoneR[which(resHormoneR$padj < 0.05), ]

resHormoneR <- cbind(hormoneR[rownames(resHormoneR), ],

resHormoneR)

```

The table below lists the significantly differentially expressed hormone

receptors.

```{r hormone-receptors-res-table, results = "asis"}

knitr::kable(resHormoneR[order(resHormoneR$pvalue),

c("gene_name", "padj", "log2FoldChange")])

```

Among the regulated hormone receptors are NR4A3, encoding a member of the

steroid-thyroid hormone-retinoid receptor superfamily (4-fold upregulated), but

also the glucocorticoid receptor (NR3C1) itself, which is known to down-regulate

in certain cell types its own gene as part of a negative feedback loop.

## Performing differential expression testing with *edgeR*

Next we will show how to perform differential expression analysis with *edgeR*.

Recall that we have a `DGEList` `dge`, containing three objects:

```{r}

names(dge)

```

We first define a design matrix, using the same formula syntax as for *DESeq2*

above.

```{r}

design <- model.matrix(~ cell + dex, dge$samples)

```

Then, we calculate normalization factors and estimate the dispersion for each

gene. Note that we need to specify the design in the dispersion calculation.

```{r}

dge <- calcNormFactors(dge)

dge <- estimateDisp(dge, design)

```

Finally, we fit the generalized linear model and perform the test. In the

`glmQLFTest` function, we indicate which coefficient (which column in the design

matrix) that we would like to test for. It is possible to test more general

contrasts as well, and the user guide contains many examples on how to do this.

The `topTags` function extracts the top-ranked genes. You can indicate the

adjusted p-value cutoff, and/or the number of genes to keep.

```{r edgeRcall}

fit <- glmQLFit(dge, design)

qlf <- glmQLFTest(fit, coef = ncol(design))

tt <- topTags(qlf, n = nrow(dge), p.value = 0.1)

tt10 <- topTags(qlf) # just the top 10 by default

tt10

```

We can compare to see how the results from the two software

overlap:

```{r}

tt.all <- topTags(qlf, n = nrow(dge), sort.by = "none")

table(DESeq2 = res$padj < 0.1, edgeR = tt.all$table$FDR < 0.1)

```

We can also compare the two lists by the ranks:

```{r plot-ranks, fig.cap="Comparison of genes ranked by *DESeq2* and *edgeR*."}

common <- !is.na(res$padj)

plot(rank(res$padj[common]),

rank(tt.all$table$FDR[common]), cex = .1,

xlab = "DESeq2", ylab = "edgeR")

```

Also with edgeR we can test for significance relative to a fold-change

threshold, using the function `glmTreat` instead of `glmLRT`. Below we set the

log fold-change threshold to 1 (i.e., fold change threshold equal to 2), as for

DESeq2 above. We also compare the results to those obtained with DESeq2.

```{r}

treatres <- glmTreat(fit, coef = ncol(design), lfc = 1)

tt.treat <- topTags(treatres, n = nrow(dge), sort.by = "none")

table(DESeq2 = resLFC1$padj < 0.1, edgeR = tt.treat$table$FDR < 0.1)

```

## Citing packages used in research

If you use the results from an R analysis package in published

research, you can find the proper citation for the software by typing

`citation("pkgName")`, where you would substitute the name of the

package for `pkgName`. Citing methods papers helps to support and

reward the individuals who put time into open source software for

genomic data analysis.

# Plotting results

## Counts plot with *DESeq2*

A quick way to visualize the counts for a particular gene is to use

the *plotCounts* function that takes as arguments the

*DESeqDataSet*, a gene name, and the group over which to plot the

counts (figure below).

```{r plotcounts, fig.cap="Normalized counts for a single gene over treatment group."}

topGene <- rownames(res)[which.min(res$padj)]

plotCounts(dds, gene = topGene, intgroup = c("dex"))

```

We can also make custom plots using the *ggplot* function from the

`r CRANpkg("ggplot2")` package (figures below).

```{r ggplotcountsjitter, out.width="80%", fig.width = 4, fig.height = 3, fig.cap="Normalized counts using ggbeeswarm"}

library("ggbeeswarm")

geneCounts <- plotCounts(dds, gene = topGene, intgroup = c("dex","cell"),

returnData = TRUE)

ggplot(geneCounts, aes(x = dex, y = count, color = cell)) +

scale_y_log10() + geom_beeswarm(cex = 3)

```

```{r ggplotcountsgroup, out.width="80%", fig.width = 4, fig.height = 3, fig.cap="Normalized counts with lines connecting cell lines. Note that the *DESeq* test actually takes into account the cell line effect, so this figure more closely depicts the difference being tested."}

ggplot(geneCounts, aes(x = dex, y = count, color = cell, group = cell)) +

scale_y_log10() + geom_point(size = 3) + geom_line()

```

## MA plot with *DESeq2*

An *MA plot* [@Dudoit2002Statistical] provides a useful overview for

the distribution of the estimated coefficients in the model,

e.g. the comparisons of interest, across all genes.

On the y-axis, the "M" stands for "minus" --

subtraction of log values is equivalent to the log of the ratio -- and

on the x-axis, the "A" stands for "average". You may hear this plot

also referred to as a mean-difference plot, or a Bland-Altman plot.

Before making the MA plot, we use the

*lfcShrink* function to shrink the log2 fold changes for the

comparison of dex treated vs untreated samples. This typically takes

about 20 seconds on a laptop.

```{r plotma, out.width="80%", fig.cap="An MA plot of changes induced by treatment. The log2 fold change for a particular comparison is plotted on the y-axis and the average of the counts normalized by size factor is shown on the x-axis. Each gene is represented with a dot. Genes with an adjusted *p* value below a threshold (here 0.1, the default) are shown in red."}

library("apeglm")

resultsNames(dds)

res <- lfcShrink(dds, coef="dex_trt_vs_untrt", type="apeglm")

DESeq2::plotMA(res, xlim=c(5,1e6), ylim=c(-5,5))

```

The *DESeq2* package uses a Bayesian procedure to moderate (or

"shrink") log2 fold changes from genes with very low counts and highly

variable counts, as can be seen by the narrowing of the vertical

spread of points on the left side of the MA plot. As shown above, the

*lfcShrink* function performs this operation. For a detailed

explanation of the rationale of moderated fold changes, please see the

*DESeq2* paper [@Love2014Moderated].

If we had not used statistical moderation to shrink the noisy log2

fold changes, we would have instead seen the following plot:

```{r plotmaNoShr, out.width="80%", fig.cap="MA plot without shrinkage."}

res.noshr <- results(dds)

DESeq2::plotMA(res.noshr, xlim=c(5,1e6), ylim=c(-5,5))

```

We can label individual points on the MA plot as well. Here we use the

*with* R function to plot a circle and text for a selected row of the

results object. Within the *with* function, only the `baseMean` and

`log2FoldChange` values for the selected rows of `res` are used.

```{r plotmalabel, out.width="80%", fig.cap="Example of labeling an individual gene on an MA plot."}

DESeq2::plotMA(res, xlim=c(5,1e6), ylim=c(-5,5))

topGene <- rownames(res)[which.min(res$padj)]

with(res[topGene, ], {

points(baseMean, log2FoldChange, col = "dodgerblue", cex = 2, lwd = 2)

text(baseMean, log2FoldChange, topGene, pos = 2, col = "dodgerblue")

})

```

Another useful diagnostic plot is the histogram of the *p* values

(figure below). This plot is best formed by excluding genes with very

small counts, which otherwise generate spikes in the histogram.

```{r histpvalue2, fig.cap="Histogram of *p* values for genes with mean normalized count larger than 1."}

hist(res$pvalue[res$baseMean > 1], breaks = 0:20/20,

col = "grey50", border = "white")

```

## Counts plot with *edgeR*

As we made a counts plot previously, we can also make this with the

top gene from the *edgeR* analysis:

```{r plot-counts-edger, fig.cap="CPM per sample for the top gene from the *edgeR* analysis."}

cpms <- cpm(dge)

topGene <- as.character(tt10$table$gene.id[1])

ord <- order(dge$samples$dex)

col <- as.integer(dge$samples$dex[ord])

par(mar=c(10,4,4,2))

barplot(cpms[topGene,ord],

col=c("skyblue","orange")[col],

names.arg=paste(dge$sample$dex[ord],"--",dge$sample$cell[ord]),

las=2, ylab="counts per million (CPM)")

```

## MA / Smear plot with *edgeR*

In *edgeR*, the MA plot is obtained via the `plotSmear` function.

```{r plot-smear, out.width="80%", fig.cap="The *edgeR* package includes the `plotSmear` function for generating MA plots."}

plotSmear(qlf, de.tags = tt$table$gene.id)

```

## Gene clustering

In the sample distance heatmap made previously, the dendrogram at the

side shows us a hierarchical clustering of the samples. Such a

clustering can also be performed for the genes. Since the clustering

is only relevant for genes that actually carry a signal, one usually

would only cluster a subset of the most highly variable genes. Here,

for demonstration, let us select the 20 genes with the highest

variance across samples. We will work with the VST data:

```{r}

library("genefilter")

topVarGenes <- head(order(rowVars(assay(vsd)), decreasing = TRUE), 20)

```

The heatmap becomes more interesting if we do not look at absolute

expression strength but rather at the amount by which each gene

deviates in a specific sample from the gene's average across all

samples. Hence, we center each genes' values across samples,

and plot a heatmap (figure below). We provide a *data.frame* that instructs the

*pheatmap* function how to label the columns.

```{r genescluster, out.width="100%", fig.cap="Heatmap of relative VST values across samples. Treatment status and cell line information are shown with colored bars at the top of the heatmap. Blocks of genes that covary across patients. Note that a set of genes at the top of the heatmap are separating the N061011 cell line from the others. In the center of the heatmap, we see a set of genes for which the dexamethasone treated samples have higher gene expression."}

mat <- assay(vsd)[ topVarGenes, ]

mat <- mat - rowMeans(mat)

anno <- as.data.frame(colData(vsd)[, c("cell","dex")])

pheatmap(mat, annotation_col = anno)

```

## Exporting results

You can easily save the results table in a CSV file that you can

then share or load with a spreadsheet program such as Excel. The call to

*as.data.frame* is necessary to convert the *DataFrame* object

(`r Biocpkg("IRanges")` package) to a *data.frame* object that can be

processed by *write.csv*. Here, we take just the top 100 genes for

demonstration.

```{r eval = FALSE}

resOrderedDF <- as.data.frame(res[order(res$pvalue), ])[1:100, ]

write.csv(resOrderedDF, file = "results.csv")

```

A more sophisticated way for exporting results the Bioconductor

package `r Biocpkg("ReportingTools")` [@Huntley2013ReportingTools].

*ReportingTools* will automatically generate dynamic HTML documents,

including links to external databases using gene identifiers

and boxplots summarizing the normalized counts across groups.

See the *ReportingTools* vignettes for full details. The simplest

version of creating a dynamic *ReportingTools* report is performed

with the following code:

```{r eval = FALSE}

library("ReportingTools")

htmlRep <- HTMLReport(shortName = "report", title = "My report",

reportDirectory = "./report")

publish(resOrderedDF %>% tibble::rownames_to_column("gene"), htmlRep)

url <- finish(htmlRep)

browseURL(url)

```

## Exploring results interactively

The `r Biocpkg("iSEE")` package enables interactive exploration of any data

stored in a *SummarizedExperiment* container. Since the *DESeqDataSet* class

directly extends *SummarizedExperiment*, it can also be used as input to *iSEE*.

To open an *iSEE* instance, we simply type

```{r}

library("iSEE")

if (interactive()) {

iSEE(dds)

}

```

We can also add additional information, such as the results of the statistical

test, to the *rowData* of the object before providing it to *iSEE*.

```{r}

stopifnot(all(rownames(dds) == rownames(res)))

res$neglog10pvalue <- -log10(res$pvalue)

rowData(dds)$res <- res

if (interactive()) {

iSEE(dds)

}

```

# Removing hidden batch effects

Suppose we did not know that there were different cell lines involved

in the experiment, only that there was treatment with

dexamethasone. The cell line effect on the counts then would represent

some hidden and unwanted variation that might be affecting

many or all of the genes in the dataset. We can use statistical

methods designed for RNA-seq from the `r Biocpkg("sva")` package

[@Leek2014Svaseq] or the `r Biocpkg("RUVSeq")` package

[@Risso2014Normalization] in Bioconductor to

detect such groupings of the samples, and then we can add these to the

*DESeqDataSet* design, in order to account for them.

The *SVA* package uses the term *surrogate variables* for the estimated

variables that we want to account for in our analysis, while the RUV

package uses the terms *factors of unwanted variation* with the acronym "Remove Unwanted

Variation" explaining the package title. We first use *SVA* to find

hidden batch effects and then *RUV* following.

## Using SVA with DESeq2

```{r}

library("sva")

```

Below we obtain a matrix of normalized counts for which the average count across

samples is larger than 1. As we described above, we are trying to

recover any hidden batch effects, supposing that we do not know the

cell line information. So we use a full model matrix with the

*dex* variable, and a reduced, or null, model matrix with only

an intercept term. Finally we specify that we want to estimate 2

surrogate variables. For more information read the manual page for the

*svaseq* function by typing `?svaseq`.

```{r}

dat <- counts(dds, normalized = TRUE)

idx <- rowMeans(dat) > 1

dat <- dat[idx, ]

mod <- model.matrix(~ dex, colData(dds))

mod0 <- model.matrix(~ 1, colData(dds))

svseq <- svaseq(dat, mod, mod0, n.sv = 2)

svseq$sv

```

Because we actually do know the cell lines, we can see how well the

SVA method did at recovering these variables (figure below).

```{r svaplot, fig.cap="Surrogate variables 1 and 2 plotted over cell line. Here, we know the hidden source of variation (cell line), and therefore can see how the SVA procedure is able to identify a source of variation which is correlated with cell line."}

par(mfrow = c(2, 1), mar = c(3,5,3,1))

for (i in 1:2) {

stripchart(svseq$sv[, i] ~ dds$cell, vertical = TRUE, main = paste0("SV", i))

abline(h = 0)

}

```

Finally, in order to use SVA to remove any effect on the counts from

our surrogate variables, we simply add these two surrogate variables

as columns to the *DESeqDataSet* and then add them to the design:

```{r}

ddssva <- dds

ddssva$SV1 <- svseq$sv[,1]

ddssva$SV2 <- svseq$sv[,2]

design(ddssva) <- ~ SV1 + SV2 + dex

```

We could then produce results controlling for surrogate variables by

running *DESeq* with the new design.

## Using RUV with DESeq2

We can also use the *RUV* method in the *RUVSeq* package to detect the

hidden batch effects.

```{r}

library("RUVSeq")

```

We can use the `RUVg` function to estimate *factors of unwanted

variation*, analogous to *SVA*'s *surrogate variables*. A difference

compared to the *SVA* procedure above, is that we first would run

*DESeq* and *results* to obtain the p-values for the analysis without

knowing about the batches, e.g. just `~ dex`. Supposing that we have

this results table `res`, we then pull out a set of *empirical control

genes* by looking at the genes that do not have a small p-value.

```{r}

set <- newSeqExpressionSet(counts(dds))

idx <- rowSums(counts(set) > 5) >= 2

set <- set[idx, ]

set <- betweenLaneNormalization(set, which="upper")

not.sig <- rownames(res)[which(res$pvalue > .1)]

empirical <- rownames(set)[ rownames(set) %in% not.sig ]

set <- RUVg(set, empirical, k=2)

pData(set)

```

We can plot the factors estimated by *RUV*:

```{r ruvplot, fig.cap="Factors of unwanted variation plotted over cell line."}

par(mfrow = c(2, 1), mar = c(3,5,3,1))

for (i in 1:2) {

stripchart(pData(set)[, i] ~ dds$cell, vertical = TRUE, main = paste0("W", i))

abline(h = 0)

}

```

As before, if we wanted to control for these factors, we simply

add them to the *DESeqDataSet* and to the design:

```{r}

ddsruv <- dds

ddsruv$W1 <- set$W_1

ddsruv$W2 <- set$W_2

design(ddsruv) <- ~ W1 + W2 + dex

```

We would then run *DESeq* with the new design to re-estimate the

parameters and results.

# Session information

As the last part of this document, we call the function *sessionInfo*,

which reports the version numbers of R and all the packages used in

this session. It is good practice to always keep such a record of this

as it will help to track down what has happened in case an R script

ceases to work or gives different results because the functions have

been changed in a newer version of one of your packages. By including

it at the bottom of a script, your reports will become more reproducible.

The session information should also *always*

be included in any emails to the

[Bioconductor support site](https://support.bioconductor.org) along

with all code used in the analysis.

```{r}

devtools::session_info()

```

# References