mini007 provides a lightweight and extensible framework for multi-agent orchestration processes capable of decomposing complex tasks and assigning them to specialized agents.

Each agent is an extension of an ellmer object. mini007 relies heavily on the excellent ellmer package but aims to make it easy to create a process where multiple specialized agents help each other sequentially to execute a task.

mini007 provides two types of agents:

- Agent, containing a name and an instruction;
- LeadAgent, which takes a complex prompt, splits it, assigns the parts to the appropriate agents and retrieves the response.

- 🧠 Memory and identity for each agent via uuid and message history.
- ⚙️ Built-in task decomposition and delegation via LLM.
- 🔄 Agent-to-agent orchestration with result chaining.
- 🌐 Compatible with any chat model supported by ellmer.
- 🧑 Possibility to set a Human In The Loop (HITL) at various execution steps.

You can install mini007 from CRAN with:

install.packages("mini007")

library(mini007)

An Agent is built upon an LLM object created by the ellmer package. In the following examples, we’ll work with OpenAI models; however, you can use any model or combination of models you want:

# no need to provide the system prompt, it will be set when creating the

# agent (see the 'instruction' parameter)

retrieve_open_ai_credential <- function() {

Sys.getenv("OPENAI_API_KEY")

}

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

After initializing the ellmer LLM object, creating the Agent is straightforward:

polar_bear_researcher <- Agent$new(

name = "POLAR BEAR RESEARCHER",

instruction = "You are an expert in polar bears, you task is to collect information about polar bears. Answer in 1 sentence max.",

llm_object = openai_4_1_mini

)

Each created Agent has an agent_id (among other metadata):

polar_bear_researcher$agent_id

#> [1] "be482bc7-54dd-4759-935c-3d3f57794717"

At any time, you can tweak the llm_object:

polar_bear_researcher$llm_object

#> <Chat OpenAI/gpt-4.1-mini turns=1 input=0 output=0 cost=$0.00>

#> ── system ──────────────────────────────────────────────────────────────────────

#> You are an expert in polar bears, you task is to collect information about polar bears. Answer in 1 sentence max.

An agent can answer a prompt using the invoke method:

polar_bear_researcher$invoke("Are polar bears dangerous for humans?")

#> Yes, polar bears can be dangerous to humans as they are powerful predators and

#> may attack if threatened or hungry.

You can also retrieve a list that displays the history of the agent:

polar_bear_researcher$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are an expert in polar bears, you task is to collect information about polar bears. Answer in 1 sentence max."

#>

#>

#> [[2]]

#> [[2]]$role

#> [1] "user"

#>

#> [[2]]$content

#> [1] "Are polar bears dangerous for humans?"

#>

#>

#> [[3]]

#> [[3]]$role

#> [1] "assistant"

#>

#> [[3]]$content

#> [1] "Yes, polar bears can be dangerous to humans as they are powerful predators and may attack if threatened or hungry."

Or the ellmer way:

polar_bear_researcher$llm_object

#> <Chat OpenAI/gpt-4.1-mini turns=3 input=43 output=23 cost=$0.00>

#> ── system ──────────────────────────────────────────────────────────────────────

#> You are an expert in polar bears, you task is to collect information about polar bears. Answer in 1 sentence max.

#> ── user ────────────────────────────────────────────────────────────────────────

#> Are polar bears dangerous for humans?

#> ── assistant [input=43 output=23 cost=$0.00] ───────────────────────────────────

#> Yes, polar bears can be dangerous to humans as they are powerful predators and may attack if threatened or hungry.

The clear_and_summarise_messages method allows you to compress an agent’s conversation history into a concise summary and clear the message history while preserving context. This is useful for keeping memory usage low while retaining important conversation context.

# After several interactions, summarise and clear the conversation history

polar_bear_researcher$clear_and_summarise_messages()

#> ✔ Conversation history summarised and appended to system prompt.

#> ℹ Summary: The user asked if polar bears are dangerous to humans, and the assistant responded that polar bears ...

polar_bear_researcher$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are an expert in polar bears, you task is to collect information about polar bears. Answer in 1 sentence max. \n\n--- Conversation Summary ---\n The user asked if polar bears are dangerous to humans, and the assistant responded that polar bears can indeed be dangerous since they are powerful predators and may attack when threatened or hungry."

This method summarises all previous conversations into a paragraph and appends it to the system prompt, then clears the conversation history. The agent retains the context but with reduced memory usage.
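To make the effect on the history concrete, here is a minimal base R sketch of the same idea. It is not mini007's implementation: summarise_history() is a hypothetical helper, and the summary text is passed in by hand rather than generated by the LLM. The separator mirrors the "--- Conversation Summary ---" marker visible in the output above.

```r
# Hypothetical helper (not part of mini007): fold a summary into the system
# prompt and drop every other message. Here the summary is supplied by the
# caller instead of being generated by a model.
summarise_history <- function(messages, summary) {
  system_msg <- messages[[1]]
  stopifnot(identical(system_msg$role, "system"))
  system_msg$content <- paste0(
    system_msg$content,
    " \n\n--- Conversation Summary ---\n ",
    summary
  )
  list(system_msg)  # only the enriched system message remains
}

history <- list(
  list(role = "system", content = "You are an expert in polar bears."),
  list(role = "user", content = "Are polar bears dangerous for humans?"),
  list(role = "assistant", content = "Yes, they can be.")
)

compact <- summarise_history(
  history,
  "The user asked whether polar bears are dangerous to humans."
)
length(compact)
cat(compact[[1]]$content)
```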

keep_last_n_messages()

When a conversation grows long, you can keep just the last N messages while preserving the system prompt. This helps control token usage without fully resetting context.
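The truncation rule can be sketched in base R as follows. keep_last_n() is a hypothetical helper, not part of mini007; it assumes, as the example below suggests, that n counts only the non-system messages and that the system prompt is always kept.

```r
# Hypothetical helper (not part of mini007): keep every system message,
# then only the last n non-system messages, in their original order.
keep_last_n <- function(messages, n) {
  roles <- vapply(messages, function(m) m$role, character(1))
  system_part <- messages[roles == "system"]
  rest <- messages[roles != "system"]
  c(system_part, utils::tail(rest, n))
}

history <- list(
  list(role = "system", content = "You are a concise assistant."),
  list(role = "user", content = "What is the capital of Italy?"),
  list(role = "assistant", content = "The capital of Italy is Rome."),
  list(role = "user", content = "What is the capital of Algeria?"),
  list(role = "assistant", content = "The capital of Algeria is Algiers.")
)

trimmed <- keep_last_n(history, n = 2)
vapply(trimmed, function(m) m$role, character(1))
# "system" "user" "assistant"
```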

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

agent <- Agent$new(

name = "history_manager",

instruction = "You are a concise assistant.",

llm_object = openai_4_1_mini

)

agent$invoke("What is the capital of Italy?")

#> The capital of Italy is Rome.

agent$invoke("What is the capital of Germany?")

#> The capital of Germany is Berlin.

agent$invoke("What is the capital of Algeria?")

#> The capital of Algeria is Algiers.

agent$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are a concise assistant."

#>

#>

#> [[2]]

#> [[2]]$role

#> [1] "user"

#>

#> [[2]]$content

#> [1] "What is the capital of Italy?"

#>

#>

#> [[3]]

#> [[3]]$role

#> [1] "assistant"

#>

#> [[3]]$content

#> [1] "The capital of Italy is Rome."

#>

#>

#> [[4]]

#> [[4]]$role

#> [1] "user"

#>

#> [[4]]$content

#> [1] "What is the capital of Germany?"

#>

#>

#> [[5]]

#> [[5]]$role

#> [1] "assistant"

#>

#> [[5]]$content

#> [1] "The capital of Germany is Berlin."

#>

#>

#> [[6]]

#> [[6]]$role

#> [1] "user"

#>

#> [[6]]$content

#> [1] "What is the capital of Algeria?"

#>

#>

#> [[7]]

#> [[7]]$role

#> [1] "assistant"

#>

#> [[7]]$content

#> [1] "The capital of Algeria is Algiers."

# Keep only the last 2 messages (system prompt is preserved)

agent$keep_last_n_messages(n = 2)

#> ✔ Conversation truncated to last 2 messages.

agent$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are a concise assistant."

#>

#>

#> [[2]]

#> [[2]]$role

#> [1] "user"

#>

#> [[2]]$content

#> [1] "What is the capital of Algeria?"

#>

#>

#> [[3]]

#> [[3]]$role

#> [1] "assistant"

#>

#> [[3]]$content

#> [1] "The capital of Algeria is Algiers."

You can inject any message (system, user, or assistant) directly into an Agent’s history with add_message(role, content). This is helpful to reconstruct, supplement, or simulate conversation steps.

- role: “user”, “assistant”, or “system”
- content: the text message to add

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

agent <- Agent$new(

name = "Pizza expert",

instruction = "You are a Pizza expert",

llm_object = openai_4_1_mini

)

# Add a user message and an assistant reply:

agent$add_message("user", "Where can I find the best pizza in the world?")

#> ✔ Added user message: Where can I find the best pizza in the world?...

agent$add_message("assistant", "You can find the best pizza in the world in Algiers, Algeria. It's tasty and crunchy.")

#> ✔ Added assistant message: You can find the best pizza in the world in Algier...

# View conversation history

agent$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are a Pizza expert"

#>

#>

#> [[2]]

#> [[2]]$role

#> [1] "user"

#>

#> [[2]]$content

#> [1] "Where can I find the best pizza in the world?"

#>

#>

#> [[3]]

#> [[3]]$role

#> [1] "assistant"

#>

#> [[3]]$content

#> [1] "You can find the best pizza in the world in Algiers, Algeria. It's tasty and crunchy."

This makes it easy to reconstruct or extend sessions, provide custom context, or insert notes for debugging/testing purposes.
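As an illustration of what a role-checked append involves, here is a small base R sketch. append_message() is a hypothetical helper; it assumes the history is a list of role/content pairs, as printed above, and rejects roles other than the three accepted by add_message().

```r
# Hypothetical helper (not part of mini007): append one message after
# checking the role and that content is a single string.
append_message <- function(messages, role, content) {
  role <- match.arg(role, c("system", "user", "assistant"))
  stopifnot(is.character(content), length(content) == 1)
  c(messages, list(list(role = role, content = content)))
}

history <- list(list(role = "system", content = "You are a Pizza expert"))
history <- append_message(history, "user", "Where can I find the best pizza in the world?")
history <- append_message(history, "assistant", "In Algiers, Algeria.")

# An unsupported role is rejected instead of silently corrupting the history
bad <- tryCatch(append_message(history, "tool", "x"), error = function(e) "rejected")
bad
```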

agent$invoke("summarise the previous conversation")

#> You asked where to find the best pizza in the world, and I replied that it can

#> be found in Algiers, Algeria, known for its tasty and crunchy pizza.

messages and turns

You can modify the messages object as you please; the changes are automatically translated into the turns required by ellmer:

agent$messages[[5]]$content <- "Obivously you asked me about the best pizza in the world which is of course in Algiery!"

agent$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are a Pizza expert"

#>

#>

#> [[2]]

#> [[2]]$role

#> [1] "user"

#>

#> [[2]]$content

#> [1] "Where can I find the best pizza in the world?"

#>

#>

#> [[3]]

#> [[3]]$role

#> [1] "assistant"

#>

#> [[3]]$content

#> [1] "You can find the best pizza in the world in Algiers, Algeria. It's tasty and crunchy."

#>

#>

#> [[4]]

#> [[4]]$role

#> [1] "user"

#>

#> [[4]]$content

#> [1] "summarise the previous conversation"

#>

#>

#> [[5]]

#> [[5]]$role

#> [1] "assistant"

#>

#> [[5]]$content

#> [1] "Obivously you asked me about the best pizza in the world which is of course in Algiery!"

The underlying ellmer object:

agent$llm_object

#> <Chat OpenAI/gpt-4.1-mini turns=5 input=62 output=36>

#> ── system ──────────────────────────────────────────────────────────────────────

#> You are a Pizza expert

#> ── user ────────────────────────────────────────────────────────────────────────

#> Where can I find the best pizza in the world?

#> ── assistant [input=0 output=0] ────────────────────────────────────────────────

#> You can find the best pizza in the world in Algiers, Algeria. It's tasty and crunchy.

#> ── user ────────────────────────────────────────────────────────────────────────

#> summarise the previous conversation

#> ── assistant [input=62 output=36] ──────────────────────────────────────────────

#> Obivously you asked me about the best pizza in the world which is of course in Algiery!

If you want to clear the conversation while preserving the current system prompt, use reset_conversation_history().

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

agent <- Agent$new(

name = "session_reset",

instruction = "You are an assistant.",

llm_object = openai_4_1_mini

)

agent$invoke("Tell me a short fun fact about dates (the fruit).")

#> Sure! Did you know that date palms can live for over 100 years and produce

#> fruit for up to 70 years? Some date palm trees have been known to still be

#> fruitful even after a century!

agent$invoke("And one more.")

#> Here's another fun fact: Dates were one of the first cultivated fruits, with

#> evidence of date farming dating back over 6,000 years in the Middle East!

# Clear all messages except the system prompt

agent$reset_conversation_history()

#> ✔ Conversation history reset. System prompt preserved.

agent$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are an assistant."

You can save an agent’s conversation history to a file with export_messages_history() and reload it later with load_messages_history(). This allows you to archive, transfer, or resume agent sessions across R sessions or machines.

In both methods, if you omit the file_path parameter, a default file named "<getwd()>/<agent_name>_messages.json" is used.
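The documented default can be expressed as a one-line path computation. default_history_path() is a hypothetical helper written for illustration; it only builds the path and does not read or write any file.

```r
# Hypothetical helper (not part of mini007): reproduce the documented default
# "<getwd()>/<agent_name>_messages.json" when no file_path is given.
default_history_path <- function(agent_name, file_path = NULL) {
  if (is.null(file_path)) {
    file_path <- file.path(getwd(), paste0(agent_name, "_messages.json"))
  }
  file_path
}

default_history_path("session_agent")                          # <getwd()>/session_agent_messages.json
default_history_path("session_agent", "volcano_session.json")  # explicit path wins
```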

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

agent <- Agent$new(

name = "session_agent",

instruction = "You are a persistent researcher.",

llm_object = openai_4_1_mini

)

# Interact with the agent

agent$invoke("Tell me something interesting about volcanoes.")

# Save the conversation

agent$export_messages_history("volcano_session.json")

# ...Later, or in a new session...

# Restore the conversation

agent$load_messages_history("volcano_session.json")

# agent$messages # Displays current history

Use update_instruction(new_instruction) to change the Agent’s system prompt mid-session. Both the first system message and the underlying ellmer system prompt are updated.
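On the messages side, the update amounts to overwriting the content of the first system message. The base R sketch below uses a hypothetical helper, update_first_system(), and does not model the corresponding change on the ellmer Chat object.

```r
# Hypothetical helper (not part of mini007): overwrite the content of the
# first system message and leave the rest of the history untouched.
update_first_system <- function(messages, new_instruction) {
  roles <- vapply(messages, function(m) m$role, character(1))
  idx <- match("system", roles)
  if (is.na(idx)) stop("No system message found")
  messages[[idx]]$content <- new_instruction
  messages
}

history <- list(
  list(role = "system", content = "You are a helpful assistant."),
  list(role = "user", content = "Hi")
)
history <- update_first_system(
  history,
  "You are a strictly concise assistant. Answer in one sentence."
)
history[[1]]$content
```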

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

agent <- Agent$new(

name = "reconfigurable",

instruction = "You are a helpful assistant.",

llm_object = openai_4_1_mini

)

agent$update_instruction("You are a strictly concise assistant. Answer in one sentence.")

#> ✔ Instruction successfully updated

#> ℹ Old: You are a helpful assistant....

#> ℹ New: You are a strictly concise assistant. Answer in on...

agent$messages

#> [[1]]

#> [[1]]$role

#> [1] "system"

#>

#> [[1]]$content

#> [1] "You are a strictly concise assistant. Answer in one sentence."

You can limit how much an Agent is allowed to spend and decide what should happen as the budget is approached or exceeded. Use set_budget() to define the maximum spend (in USD), and set_budget_policy() to control warnings and over-budget behavior:

- on_exceed: what to do once the budget is exceeded, one of "abort", "warn", or "ask".
- warn_at: the fraction of the budget at which a warning is emitted (default 0.8).

# An API KEY is required to invoke the Agent

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

agent <- Agent$new(

name = "cost_conscious_assistant",

instruction = "Answer succinctly.",

llm_object = openai_4_1_mini

)

# Set a 5 USD budget

agent$set_budget(5)

#> ✔ Budget successfully set to 5$

#> ℹ Budget policy: on_exceed='abort', warn_at=0.8

#> ℹ Use the set_budget_policy() method to configure the budget policy.

# Warn at 90% of the budget and ask what to do if exceeded

agent$set_budget_policy(on_exceed = "ask", warn_at = 0.9)

#> ✔ Budget policy set: on_exceed='ask', warn_at=0.9

# Normal usage

agent$invoke("Give me a one-sentence fun fact about Algeria.")

#> Algeria is home to the Sahara Desert’s ancient Tassili n’Ajjer rock art,

#> featuring some of the oldest known prehistoric cave paintings in the world!

The current policy is echoed when setting the budget. You can update the policy at any time, before or during an interaction lifecycle, to adapt to your workflow’s tolerance for cost overruns.
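The interplay between set_budget() and set_budget_policy() can be sketched as a small decision function. budget_status() is hypothetical, and its thresholds are assumptions based on the description above: a warning once spending reaches warn_at times the budget, and the on_exceed action once spending goes past the budget. The remaining amount is the same subtraction that appears as budget_remaining in the get_usage_stats() output below.

```r
# Hypothetical helper (not part of mini007): map a spend to a policy action.
# Assumption: "warn" when spent >= warn_at * budget, on_exceed when spent > budget.
budget_status <- function(spent, budget, warn_at = 0.8,
                          on_exceed = c("abort", "warn", "ask")) {
  on_exceed <- match.arg(on_exceed)
  action <- if (spent > budget) {
    on_exceed
  } else if (spent >= warn_at * budget) {
    "warn"
  } else {
    "ok"
  }
  list(remaining = budget - spent, used_fraction = spent / budget, action = action)
}

budget_status(spent = 1e-04, budget = 5)$remaining               # 4.9999
budget_status(spent = 4.6, budget = 5, warn_at = 0.9)$action     # "warn"
budget_status(spent = 5.2, budget = 5, on_exceed = "ask")$action # "ask"
```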

Call get_usage_stats() to retrieve total tokens,

estimated cost, and budget information (if set).

stats <- agent$get_usage_stats()

stats

#> $estimated_cost

#> [1] 1e-04

#>

#> $budget

#> [1] 5

#>

#> $budget_remaining

#> [1] 4.9999

generate_execute_r_code() lets an Agent translate a natural-language task description into R code, optionally validate its syntax, and optionally execute it:

- code_description: the natural-language description of the task.
- validate: TRUE to run a syntax validation step on the generated code first.
- execute: TRUE to execute the generated code (requires successful validation).
- interactive: if TRUE, shows the code and asks for confirmation before executing.
- The environment used for evaluation when execute = TRUE defaults to globalenv().

Safety notes:

- Set validate = TRUE and review the printed code before execution.
- Keep interactive = TRUE to require an explicit confirmation before running code.
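A syntax check of generated R code can be done in base R with parse(). The sketch below is an assumption about how such a validate-then-execute step might look, not mini007's implementation; validate_code() and run_code() are hypothetical helpers. Evaluating in a fresh environment keeps generated variables out of the global environment, but it is not a security sandbox, which is why the safety notes above still apply.

```r
# Hypothetical helper: syntax-only validation via parse(); nothing is run.
validate_code <- function(code) {
  tryCatch({
    parse(text = code)
    list(valid = TRUE, message = "Syntax is valid")
  }, error = function(e) list(valid = FALSE, message = conditionMessage(e)))
}

# Hypothetical helper: execute only if validation passed, in its own environment.
run_code <- function(code, env = new.env(parent = baseenv())) {
  check <- validate_code(code)
  if (!check$valid) stop("Refusing to execute invalid code: ", check$message)
  value <- eval(parse(text = code), envir = env)  # value of the last expression
  list(value = value, env = env)
}

validate_code("x <- c(1, 2, 3); mean(x)")$valid  # TRUE
validate_code("x <- c(1, 2,")$valid              # FALSE
run_code("x <- c(1, 2, 3); mean(x)")$value       # 2
```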

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

r_assistant <- Agent$new(

name = "R Code Assistant",

instruction = "You are an expert R programmer.",

llm_object = openai_4_1_mini

)

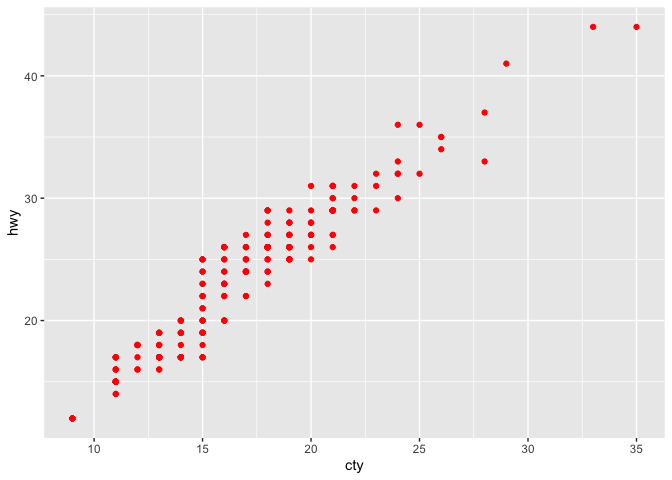

r_assistant$generate_execute_r_code(

code_description = "using ggplot2, generate a scatterplot of hwy and cty in red",

validate = TRUE,

execute = TRUE,

interactive = FALSE

)

#> ℹ Executing generated R code...

#> ✔ Code executed successfully

#> $description

#> [1] "using ggplot2, generate a scatterplot of hwy and cty in red"

#>

#> $code

#> library(ggplot2);ggplot(mpg,aes(x=cty,y=hwy))+geom_point(color="red")

#>

#> $validated

#> [1] TRUE

#>

#> $validation_message

#> [1] "Syntax is valid"

#>

#> $executed

#> [1] TRUE

#>

#> $execution_result

#> $execution_result$value

#>

#> $execution_result$output

#> character(0)

We can create as many Agents as we want; the LeadAgent will dispatch the instructions to the agents and return the final answer. Let’s create three Agents, a researcher, a summarizer and a translator:

researcher <- Agent$new(

name = "researcher",

instruction = "You are a research assistant. Your job is to answer factual questions with detailed and accurate information. Do not answer with more than 2 lines",

llm_object = openai_4_1_mini

)

summarizer <- Agent$new(

name = "summarizer",

instruction = "You are an agent designed to summarise a given text into 3 distinct bullet points.",

llm_object = openai_4_1_mini

)

translator <- Agent$new(

name = "translator",

instruction = "Your role is to translate a text from English to German",

llm_object = openai_4_1_mini

)

Now, the most important part is to create a LeadAgent:

lead_agent <- LeadAgent$new(

name = "Leader",

llm_object = openai_4_1_mini

)

Note that the LeadAgent cannot receive an instruction, as it already has the necessary instructions.

Next, we need to register the Agents with the LeadAgent, as follows:

lead_agent$register_agents(c(researcher, summarizer, translator))

#> ✔ Agent(s) successfully registered.

lapply(lead_agent$agents, function(x) {x$name})

#> [[1]]

#> [1] "researcher"

#>

#> [[2]]

#> [1] "summarizer"

#>

#> [[3]]

#> [1] "translator"

Before executing your prompt, you can ask the LeadAgent to generate a plan so that you can see which Agent will be used for which prompt:

prompt_to_execute <- "Tell me about the economic situation in Algeria, summarize it in 3 bullet points, then translate it into German."

plan <- lead_agent$generate_plan(prompt_to_execute)

#> ✔ Plan successfully generated.

plan

#> [[1]]

#> [[1]]$agent_id

#> 422d6a82-6288-4abc-8073-c5990506c071

#>

#> [[1]]$agent_name

#> [1] "researcher"

#>

#> [[1]]$model_provider

#> [1] "OpenAI"

#>

#> [[1]]$model_name

#> [1] "gpt-4.1-mini"

#>

#> [[1]]$prompt

#> [1] "Gather current information on Algeria's economic situation, including key indicators such as GDP growth, main industries, and challenges"

#>

#>

#> [[2]]

#> [[2]]$agent_id

#> abe3d4be-f0d9-42ec-a6b9-e8dba32d8bfc

#>

#> [[2]]$agent_name

#> [1] "summarizer"

#>

#> [[2]]$model_provider

#> [1] "OpenAI"

#>

#> [[2]]$model_name

#> [1] "gpt-4.1-mini"

#>

#> [[2]]$prompt

#> [1] "Summarize the economic situation in Algeria into 3 concise bullet points in English"

#>

#>

#> [[3]]

#> [[3]]$agent_id

#> a3faa6d7-4f81-4224-b70e-ae8f7e7a40ab

#>

#> [[3]]$agent_name

#> [1] "translator"

#>

#> [[3]]$model_provider

#> [1] "OpenAI"

#>

#> [[3]]$model_name

#> [1] "gpt-4.1-mini"

#>

#> [[3]]$prompt

#> [1] "Translate the 3 bullet points from English into German"

Now, to execute the workflow, we just need to call the invoke method, which delegates the prompts to the suitable Agents behind the scenes and retrieves the final response:

response <- lead_agent$invoke("Tell me about the economic situation in Algeria, summarize it in 3 bullet points, then translate it into German.")

#>

#> ── Using existing plan ──

response

#> - Die algerische Wirtschaft wächst moderat mit 2-3 % jährlich und ist stark von

#> Kohlenwasserstoffen abhängig, die über 90 % der Exporte ausmachen.

#> - Wichtige Sektoren sind Öl und Gas, Landwirtschaft und verarbeitendes Gewerbe.

#>

#> - Zu den großen Herausforderungen zählen die wirtschaftliche Diversifizierung,

#> hohe Arbeitslosigkeit, die Abhängigkeit von schwankenden Ölpreisen und

#> gesellschaftliche Forderungen nach Reformen.

If you want to inspect the multi-agent orchestration, you have access to the agents_interaction object:

lead_agent$agents_interaction

#> [[1]]

#> [[1]]$agent_id

#> 422d6a82-6288-4abc-8073-c5990506c071

#>

#> [[1]]$agent_name

#> [1] "researcher"

#>

#> [[1]]$model_provider

#> [1] "OpenAI"

#>

#> [[1]]$model_name

#> [1] "gpt-4.1-mini"

#>

#> [[1]]$prompt

#> [1] "Gather current information on Algeria's economic situation, including key indicators such as GDP growth, main industries, and challenges"

#>

#> [[1]]$response

#> As of 2024, Algeria's GDP growth is moderate, estimated around 2-3% annually,

#> driven mainly by hydrocarbons which constitute over 90% of exports; key

#> industries include oil and gas, agriculture, and manufacturing. Challenges

#> include economic diversification, high unemployment, reliance on volatile oil

#> revenues, and social pressures for reforms.

#>

#> [[1]]$edited_by_hitl

#> [1] FALSE

#>

#>

#> [[2]]

#> [[2]]$agent_id

#> abe3d4be-f0d9-42ec-a6b9-e8dba32d8bfc

#>

#> [[2]]$agent_name

#> [1] "summarizer"

#>

#> [[2]]$model_provider

#> [1] "OpenAI"

#>

#> [[2]]$model_name

#> [1] "gpt-4.1-mini"

#>

#> [[2]]$prompt

#> [1] "Summarize the economic situation in Algeria into 3 concise bullet points in English"

#>

#> [[2]]$response

#> - Algeria's economy grows moderately at 2-3% annually, heavily reliant on

#> hydrocarbons that make up over 90% of exports.

#> - Key sectors include oil and gas, agriculture, and manufacturing.

#> - Major challenges are economic diversification, high unemployment, dependence

#> on fluctuating oil prices, and social demands for reform.

#>

#> [[2]]$edited_by_hitl

#> [1] FALSE

#>

#>

#> [[3]]

#> [[3]]$agent_id

#> a3faa6d7-4f81-4224-b70e-ae8f7e7a40ab

#>

#> [[3]]$agent_name

#> [1] "translator"

#>

#> [[3]]$model_provider

#> [1] "OpenAI"

#>

#> [[3]]$model_name

#> [1] "gpt-4.1-mini"

#>

#> [[3]]$prompt

#> [1] "Translate the 3 bullet points from English into German"

#>

#> [[3]]$response

#> - Die algerische Wirtschaft wächst moderat mit 2-3 % jährlich und ist stark von

#> Kohlenwasserstoffen abhängig, die über 90 % der Exporte ausmachen.

#> - Wichtige Sektoren sind Öl und Gas, Landwirtschaft und verarbeitendes Gewerbe.

#>

#> - Zu den großen Herausforderungen zählen die wirtschaftliche Diversifizierung,

#> hohe Arbeitslosigkeit, die Abhängigkeit von schwankenden Ölpreisen und

#> gesellschaftliche Forderungen nach Reformen.

#>

#> [[3]]$edited_by_hitl

#> [1] FALSE

The above example is extremely simple; the usefulness of mini007 shines in more complex processes, where multi-agent sequential orchestration adds more value.
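The idea of sequential orchestration with result chaining can be sketched without any API calls. In this toy version the agents are plain R functions standing in for Agent$invoke(), the plan mimics the agent_name/prompt fields returned by generate_plan(), and passing the previous response as a "Context:" suffix is an assumption made for illustration, not necessarily how the LeadAgent builds its prompts.

```r
# Extract the text after the last "\nContext: " marker (whole prompt if absent)
context_of <- function(prompt) {
  parts <- strsplit(prompt, "\nContext: ", fixed = TRUE)[[1]]
  parts[length(parts)]
}

# Stand-in agents: plain functions instead of Agent$invoke()
agents <- list(
  researcher = function(prompt) "Algeria's economy relies heavily on hydrocarbons.",
  summarizer = function(prompt) paste("- Summary:", context_of(prompt)),
  translator = function(prompt) paste("[DE]", context_of(prompt))
)

plan <- list(
  list(agent_name = "researcher", prompt = "Gather information on Algeria's economy"),
  list(agent_name = "summarizer", prompt = "Summarize it in bullet points"),
  list(agent_name = "translator", prompt = "Translate it into German")
)

# Run the steps in order, feeding each response into the next prompt and
# logging every step (loosely mirroring agents_interaction)
run_plan <- function(plan, agents) {
  previous <- NULL
  interactions <- vector("list", length(plan))
  for (i in seq_along(plan)) {
    step <- plan[[i]]
    prompt <- if (is.null(previous)) step$prompt else paste0(step$prompt, "\nContext: ", previous)
    previous <- agents[[step$agent_name]](prompt)
    interactions[[i]] <- list(agent_name = step$agent_name, prompt = prompt, response = previous)
  }
  list(response = previous, interactions = interactions)
}

result <- run_plan(plan, agents)
result$response
# "[DE] - Summary: Algeria's economy relies heavily on hydrocarbons."
```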

visualize_plan()

Sometimes, before running your workflow, it is helpful to view the orchestration as a visual diagram showing the sequence of agents and the prompt each will receive. After generating a plan, you can call visualize_plan().

This function displays the agents in workflow order as labeled boxes. Hovering over a box reveals the delegated prompt. The visualization uses the DiagrammeR package. If no plan exists, it asks you to generate one first.
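To give an idea of what such a diagram encodes, the sketch below turns a plan into Graphviz DOT text, one box per step with the prompt as tooltip. plan_to_dot() is a hypothetical helper and not necessarily how visualize_plan() is implemented; rendering the result with DiagrammeR::grViz() assumes DiagrammeR is installed.

```r
# Hypothetical helper: build Graphviz DOT text from a list of
# agent_name/prompt steps, chaining the steps left to right.
plan_to_dot <- function(plan) {
  esc <- function(x) gsub("\"", "\\\\\"", x)  # escape double quotes for DOT
  nodes <- vapply(seq_along(plan), function(i) {
    sprintf('  step%d [shape = box, label = "%d. %s", tooltip = "%s"];',
            i, i, esc(plan[[i]]$agent_name), esc(plan[[i]]$prompt))
  }, character(1))
  edges <- if (length(plan) > 1) {
    sprintf("  step%d -> step%d;", seq_len(length(plan) - 1), seq(2, length(plan)))
  } else {
    character(0)
  }
  paste(c("digraph plan {", "  rankdir = LR;", nodes, edges, "}"), collapse = "\n")
}

plan <- list(
  list(agent_name = "researcher", prompt = "Gather information on Algeria's economy"),
  list(agent_name = "summarizer", prompt = "Summarize it in 3 bullet points"),
  list(agent_name = "translator", prompt = "Translate the bullet points into German")
)

dot <- plan_to_dot(plan)
cat(dot)
# With DiagrammeR installed: DiagrammeR::grViz(dot)
```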

lead_agent$visualize_plan()

If you want to compare several LLM models, the LeadAgent provides a broadcast method that sends a prompt to several different agents and returns each agent’s result, so that you can compare them and potentially choose the best agent/model for the defined prompt.
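Once responses come back, it can be handy to line them up in a table. The sketch below flattens a broadcast-style result into a data frame; broadcast_to_df() is hypothetical, and it runs on a mock list that assumes the agent_name, model_name and response fields shown in the broadcast output in this section.

```r
# Hypothetical post-processing helper: one row per agent for side-by-side comparison
broadcast_to_df <- function(results) {
  data.frame(
    agent_name = vapply(results, `[[`, character(1), "agent_name"),
    model_name = vapply(results, `[[`, character(1), "model_name"),
    response   = vapply(results, `[[`, character(1), "response"),
    stringsAsFactors = FALSE
  )
}

# Mock result with the same shape, so the sketch runs without an API key
results <- list(
  list(agent_name = "openai_4_1_agent", model_name = "gpt-4.1", response = "Answer A."),
  list(agent_name = "openai_4_1_nano_agent", model_name = "gpt-4.1-nano", response = "Answer B, a bit longer.")
)

comparison <- broadcast_to_df(results)
comparison$n_chars <- nchar(comparison$response)  # a simple length comparison
comparison
```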

Let’s go through an example:

openai_4_1 <- ellmer::chat(

name = "openai/gpt-4.1",

credentials = retrieve_open_ai_credential,

echo = "none"

)

openai_4_1_agent <- Agent$new(

name = "openai_4_1_agent",

instruction = "You are an AI assistant. Answer in 1 sentence max.",

llm_object = openai_4_1

)

openai_4_1_nano <- ellmer::chat(

name = "openai/gpt-4.1-nano",

credentials = retrieve_open_ai_credential,

echo = "none"

)

openai_4_1_nano_agent <- Agent$new(

name = "openai_4_1_nano_agent",

instruction = "You are an AI assistant. Answer in 1 sentence max.",

llm_object = openai_4_1_nano

)

lead_agent$clear_agents() # removing previous agents

lead_agent$register_agents(c(openai_4_1_agent, openai_4_1_nano_agent))

#> ✔ Agent(s) successfully registered.

lead_agent$broadcast(prompt = "If I were Algerian, which song would I like to sing when running under the rain? how about a flower?")

#> [[1]]

#> [[1]]$agent_id

#> [1] "55d5ae2c-76b0-4ef2-a67f-15d699c1a862"

#>

#> [[1]]$agent_name

#> [1] "openai_4_1_agent"

#>

#> [[1]]$model_provider

#> [1] "OpenAI"

#>

#> [[1]]$model_name

#> [1] "gpt-4.1"

#>

#> [[1]]$response

#> As an Algerian, you might enjoy singing "Ya Rayah" while running under the

#> rain, and if you were a flower, you might prefer quietly soaking in the rain's

#> nourishment instead of singing.

#>

#>

#> [[2]]

#> [[2]]$agent_id

#> [1] "70bdac96-3bcb-4189-bec7-67dc1156257c"

#>

#> [[2]]$agent_name

#> [1] "openai_4_1_nano_agent"

#>

#> [[2]]$model_provider

#> [1] "OpenAI"

#>

#> [[2]]$model_name

#> [1] "gpt-4.1-nano"

#>

#> [[2]]$response

#> You might enjoy singing "Lila" by Cheb Khaled when running under the rain, and

#> "Ya Rayah" for a flower, reflecting Algerian music and culture.

You can also access the broadcasting history using the broadcast_history attribute:

lead_agent$broadcast_history

#> [[1]]

#> [[1]]$prompt

#> [1] "If I were Algerian, which song would I like to sing when running under the rain? how about a flower?"

#>

#> [[1]]$responses

#> [[1]]$responses[[1]]

#> [[1]]$responses[[1]]$agent_id

#> [1] "55d5ae2c-76b0-4ef2-a67f-15d699c1a862"

#>

#> [[1]]$responses[[1]]$agent_name

#> [1] "openai_4_1_agent"

#>

#> [[1]]$responses[[1]]$model_provider

#> [1] "OpenAI"

#>

#> [[1]]$responses[[1]]$model_name

#> [1] "gpt-4.1"

#>

#> [[1]]$responses[[1]]$response

#> As an Algerian, you might enjoy singing "Ya Rayah" while running under the

#> rain, and if you were a flower, you might prefer quietly soaking in the rain's

#> nourishment instead of singing.

#>

#>

#> [[1]]$responses[[2]]

#> [[1]]$responses[[2]]$agent_id

#> [1] "70bdac96-3bcb-4189-bec7-67dc1156257c"

#>

#> [[1]]$responses[[2]]$agent_name

#> [1] "openai_4_1_nano_agent"

#>

#> [[1]]$responses[[2]]$model_provider

#> [1] "OpenAI"

#>

#> [[1]]$responses[[2]]$model_name

#> [1] "gpt-4.1-nano"

#>

#> [[1]]$responses[[2]]$response

#> You might enjoy singing "Lila" by Cheb Khaled when running under the rain, and

#> "Ya Rayah" for a flower, reflecting Algerian music and culture.

When executing an LLM workflow that relies on many steps, you can set a Human In The Loop (HITL) trigger that checks the model’s response at a specific step. You can define a HITL trigger after defining a LeadAgent as follows:

openai_llm_object <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

lead_agent <- LeadAgent$new(

name = "Leader",

llm_object = openai_llm_object

)

lead_agent$set_hitl(steps = 1)

#> ✔ HITL successfully set at step(s) 1.

lead_agent$hitl_steps

#> [1] 1

After setting the HITL to step 1, the workflow execution will pause at that step and give the user 3 choices.

Note that you can set a HITL at several steps; for example, lead_agent$set_hitl(steps = c(1, 2)) will set the HITL at step 1 and step 2.
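The checkpoint logic can be sketched as follows. hitl_checkpoint() is hypothetical and does not reproduce mini007’s 3 interactive choices; it only shows the general pattern of optionally replacing a response at configured steps and recording the edit, similar to the edited_by_hitl field in the agents_interaction output above. The review function is scripted so the sketch runs non-interactively.

```r
# Hypothetical helper: at steps listed in hitl_steps, let review() replace the
# response (returning NULL keeps it) and record whether an edit happened.
hitl_checkpoint <- function(step, response, hitl_steps, review) {
  if (!(step %in% hitl_steps)) {
    return(list(response = response, edited_by_hitl = FALSE))
  }
  revised <- review(response)
  if (is.null(revised)) {
    list(response = response, edited_by_hitl = FALSE)
  } else {
    list(response = revised, edited_by_hitl = TRUE)
  }
}

# In an interactive session review() could call readline(); here it is scripted
review <- function(response) paste(response, "(checked by a human)")

hitl_checkpoint(1, "Draft answer", hitl_steps = c(1, 2), review = review)
hitl_checkpoint(3, "Draft answer", hitl_steps = c(1, 2), review = review)
```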

Sometimes you want to send a prompt to several agents and pick the best answer. To choose the best answer, you can also rely on the LeadAgent, which will act as a judge and pick the best answer for you. Use the judge_and_choose_best_response method as follows:

openai_4_1 <- ellmer::chat(

name = "openai/gpt-4.1",

credentials = retrieve_open_ai_credential,

echo = "none"

)

stylist_1 <- Agent$new(

name = "stylist",

instruction = "You are an AI assistant. Answer in 1 sentence max.",

llm_object = openai_4_1

)

openai_4_1_nano <- ellmer::chat(

name = "openai/gpt-4.1-nano",

credentials = retrieve_open_ai_credential,

echo = "none"

)

stylist_2 <- Agent$new(

name = "stylist2",

instruction = "You are an AI assistant. Answer in 1 sentence max.",

llm_object = openai_4_1_nano

)

openai_4_1_mini <- ellmer::chat(

name = "openai/gpt-4.1-mini",

credentials = retrieve_open_ai_credential,

echo = "none"

)

stylist_lead_agent <- LeadAgent$new(

name = "Stylist Leader",

llm_object = openai_4_1_mini

)

stylist_lead_agent$register_agents(c(stylist_1, stylist_2))

#> ✔ Agent(s) successfully registered.

best_answer <- stylist_lead_agent$judge_and_choose_best_response(

"what's the best way to wear a blue kalvin klein shirt in winter with a pink pair of trousers?"

)

best_answer

#> $proposals

#> $proposals[[1]]

#> $proposals[[1]]$agent_id

#> [1] "d6a6f683-eeb5-4e0d-af35-1d5b08595591"

#>

#> $proposals[[1]]$agent_name

#> [1] "stylist"

#>

#> $proposals[[1]]$response

#> Layer the blue Calvin Klein shirt under a neutral or grey wool coat, add a

#> scarf in a complementary color (like navy or blush), and wear stylish boots to

#> balance the look with the pink trousers.

#>

#>

#> $proposals[[2]]

#> $proposals[[2]]$agent_id

#> [1] "ef02563c-6c9d-460a-833c-bbd3b68a0316"

#>

#> $proposals[[2]]$agent_name

#> [1] "stylist2"

#>

#> $proposals[[2]]$response

#> Layer the blue Calvin Klein shirt with a neutral-colored blazer or cardigan and

#> pair it with a warm coat, while adding accessories like a scarf to complement

#> the pink trousers for a stylish winter look.

#>

#>

#>

#> $chosen_response

#> Layer the blue Calvin Klein shirt under a neutral or grey wool coat, add a

#> scarf in a complementary color (like navy or blush), and wear stylish boots to

#> balance the look with the pink trousers.

Please note that the mini007 project is released with a Contributor Code of Conduct. By contributing to this project, you agree to abide by its terms.